1. Introduction

Epilepsy is a neurological disorder with a worldwide prevalence of 0.8% to 1.2%, where 20% to 30% of cases are untreatable with Anti-Epileptic Drugs (AED) [1,2]. For those patients, a valuable treatment is a surgical intervention [3], with a success rate ranging from 30% to 70% [4].

The success of the surgical intervention depends on a precise localization of the epileptogenic tissue. Diagnostic techniques, including but not limited to Stereotactic Electroencephalography (SEEG), play a crucial role in achieving this accuracy [3,5,6]. SEEG measures the electric signal within brain areas using deep electrodes, with the implantation and electrode localization guided by Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) images. However, given the limited structural detail in CT images, a fusion with an MRI is required. This fusion provides a comprehensive representation of both anatomical structures and electrode positions in a unified image [7,8,9].

Image fusion is a processing technique that involves mapping images into a common coordinate system and merging the aligned results into a single output. Numerous methods are available for image fusion; however, the performance of each technique is influenced by characteristics related to the acquisition and image type [10,11]. When external objects are present in an SEEG sequence, they may interfere with the registration process, which relies on similarity metrics computed from voxel data in both images [10,11]. Consequently, changes in the structural data of the CT image can affect the calculation of similarity metrics, leading to misregistration in the fused images.

Motivated by the challenges associated with image registration, we conducted a systematic review, using the methodology outlined by Kitchenham [12] for literature reviews in software engineering. Our research looked into the techniques and tools used for brain image fusion between CT and MRI, as well as the validation techniques employed to measure their performance [13]. Our review revealed a notable absence of a standard method for image fusion validation in CT and MRI, especially when external objects are present. This is particularly concerning given that the Retrospective Image Registration Evaluation (RIRE) Project, once a standard methodology, is no longer in use. Our review also highlighted the importance of understanding the performance of various image fusion techniques in applications like SEEG that involve external objects. We found that methods using Mutual Information (MI) as the optimization metric exhibited superior performance in multimodal image fusion.

These challenges were evident in SEEG examinations conducted at Clinica Imbanaco Grupo Quironsalud in Cali, Colombia, where we identified registration errors in the fusion of MRI and CT images primarily attributed to the presence of electrodes. These inconsistencies required manual adjustments to correctly align the misregistered MRI images. In response to these challenges, we introduce an image fusion method that accounts for external elements, primarily in exams like SEEG. It is crucial to note that while our method is designed to mitigate the impact of external objects in the images and enhance the spatial accuracy of electrode localization, it does not address the analysis of electric signals from the deep electrodes. Such an analysis falls outside the scope of this study and would demand a distinct analytical framework.

2. Fusion Method

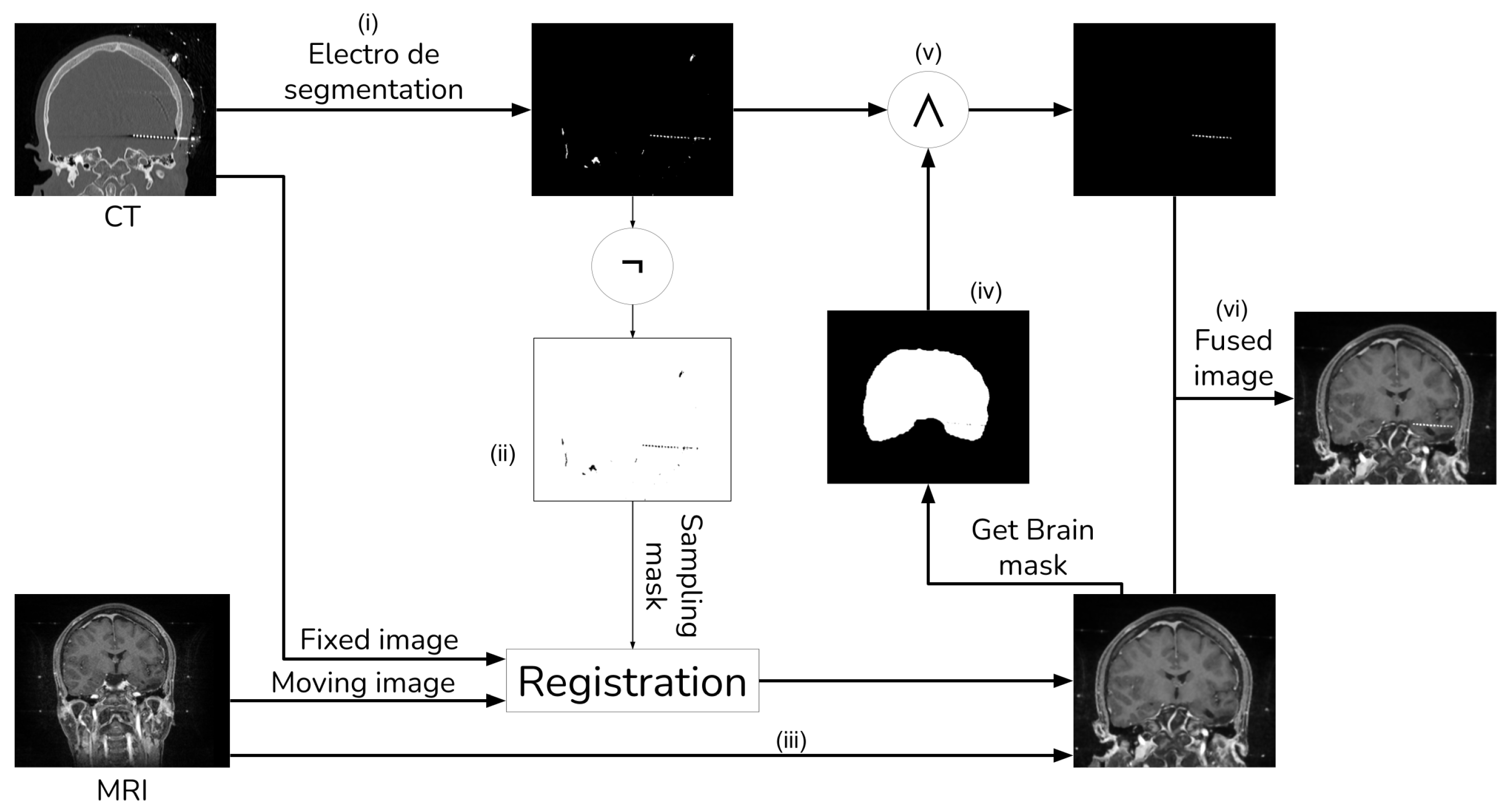

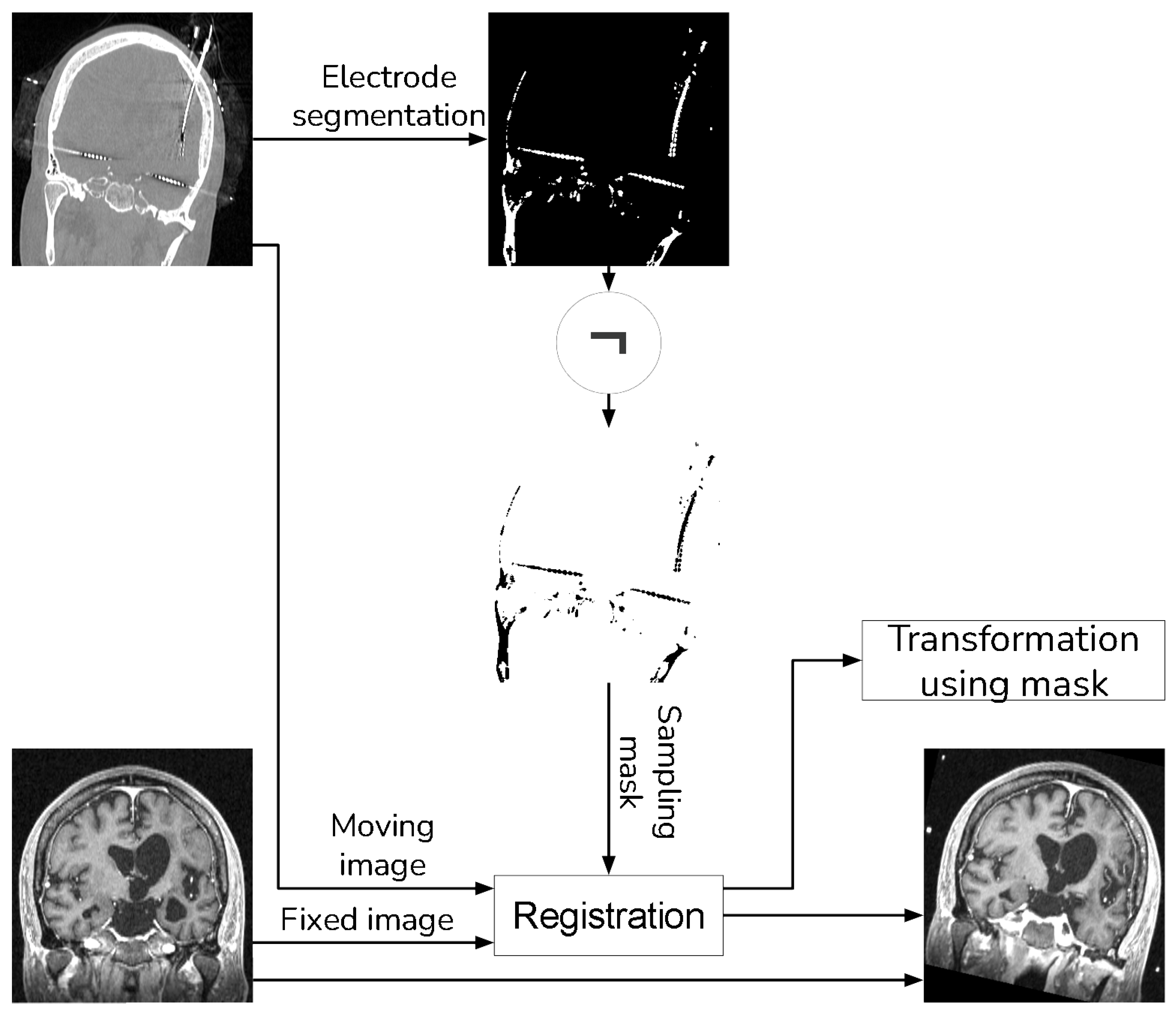

The general procedure shown in Figure 1 consists of six main steps: (i) initial electrode segmentation in the CT image; (ii) generation of a mask of all non-electrode voxels in the CT image; (iii) registration of the MRI against the CT image, using the non-electrode sampling mask to compute the transformation; (iv) segmentation of the brain from the registered MRI with the ROBEX tool to compute a brain mask; (v) refinement of the electrode segmentation using the brain mask obtained in the previous step; and (vi) integration of the fully segmented electrodes with the registered MRI.

Figure 1. Image fusion method with external object.

2.1. CT Electrode Segmentation

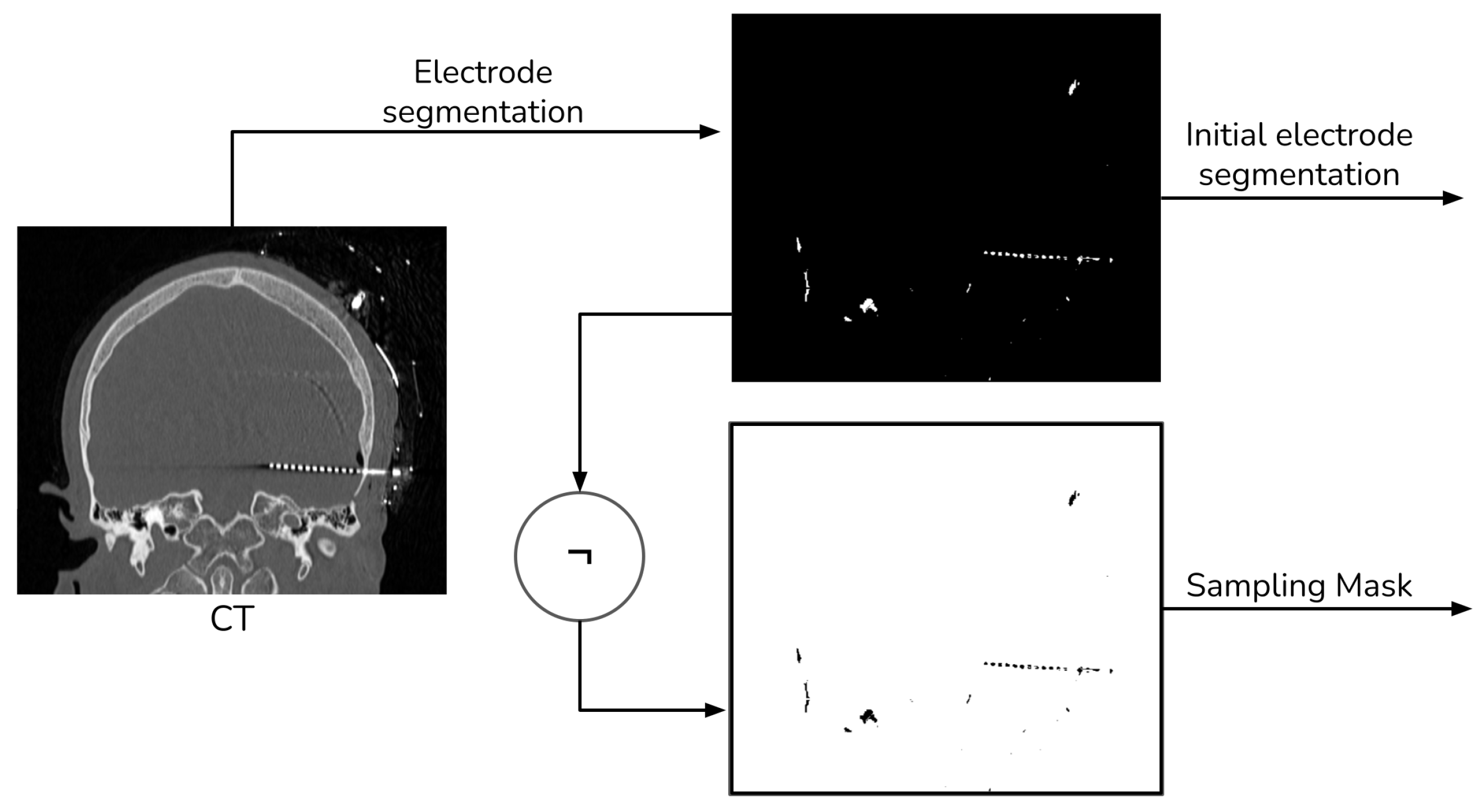

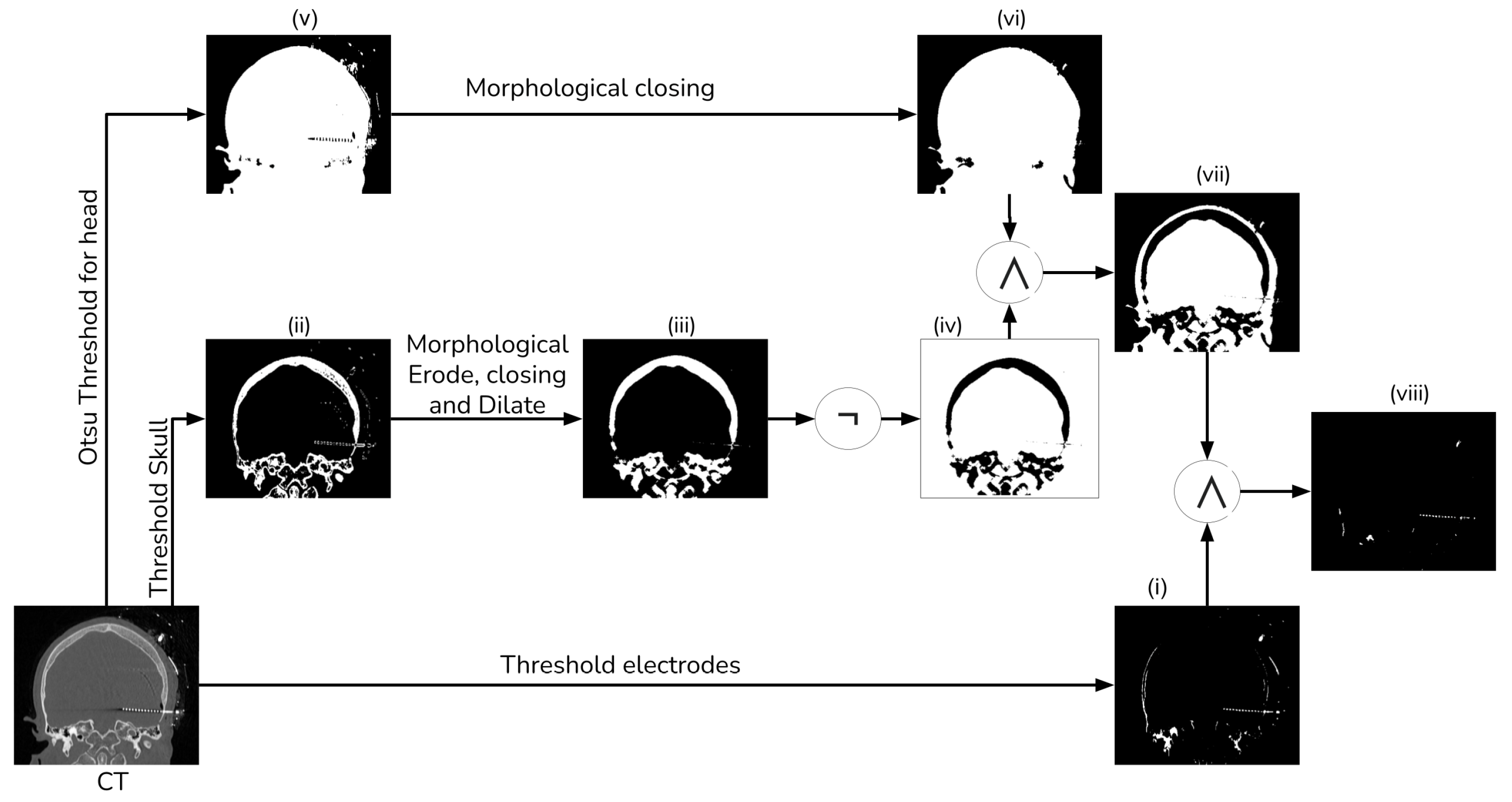

Given that our literature search did not identify any fusion methods that take into account the electrode and utilize segmentation for registration [13], we devised our own segmentation procedure. This procedure employs thresholding and morphological operations, as shown in Figure 2 and Figure 3.

Figure 2. Electrode segmentation general procedure.

Figure 3. Electrode segmentation detailed procedure.

To extract the electrodes from the CT, we employed simple thresholding with a window of 1500 HU to 3000 HU (i). Subsequently, we computed a mask of the head tissue to remove the skull from the segmented image (viii). Next, we generated a brain mask for the MRI, which had been aligned with the CT, utilizing the ROBEX (Robust Brain Extraction) tool, a skull-stripping method based on the work of Iglesias et al. [14]. Finally, we applied this mask to the registered electrodes to remove any objects situated outside the brain.

To segment the skull, we employed a simple threshold with a window ranging from 300 HU to 1900 HU (ii). Subsequently, we employed a morphological eroding technique with a cross kernel of size to eliminate the electrodes from the skull. Next, a morphological closing and dilation operation with a ball kernel of size was performed to connect all the bone tissue (iii). Finally, we applied a NOT operator to generate a mask of no-skull gray voxels (iv).

We generate a head mask using Otsu’s thresholding (v) to exclude any object external to the head [15]. After that, we apply a morphological hole-filling operation to remove any internal gap within the head (vi). Then, we create a brain mask by intersecting the head mask and the no-skull mask (vii). Finally, we combine the brain mask with the thresholding electrodes, ensuring the removal of most of the skull tissue (viii).
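The thresholding and NOT steps described above can be sketched with NumPy. This is a minimal illustration, not the authors' implementation: the function names and the tiny test volume are hypothetical, and the morphological operations are omitted because their kernel sizes are not given in the text. Only the two HU windows come from the section.

```python
import numpy as np

# Threshold windows quoted in the text, in Hounsfield units (HU).
ELECTRODE_WINDOW = (1500, 3000)
SKULL_WINDOW = (300, 1900)


def window_mask(ct, low, high):
    """Boolean mask of voxels whose intensity lies in [low, high]."""
    return (ct >= low) & (ct <= high)


def non_electrode_mask(ct):
    """NOT of the thresholded electrodes (hypothetical helper name)."""
    return ~window_mask(ct, *ELECTRODE_WINDOW)


# Hypothetical 1 x 2 x 3 volume: air, soft tissue, bone,
# a voxel inside both windows, and two electrode-like voxels.
ct = np.array([[[-1000, 40, 700], [1700, 2500, 2900]]])

electrodes = window_mask(ct, *ELECTRODE_WINDOW)
skull = window_mask(ct, *SKULL_WINDOW)
overlap = electrodes & skull      # voxels claimed by both windows
sampling = non_electrode_mask(ct)  # voxels left for registration sampling
```

The two windows share the 1500 HU to 1900 HU range, so thresholding alone cannot separate dense bone from electrodes; in this sketch the `overlap` mask makes that visible, which is consistent with the text's use of morphological operations and a brain mask to clean up the result.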

2.2. MRI Registration

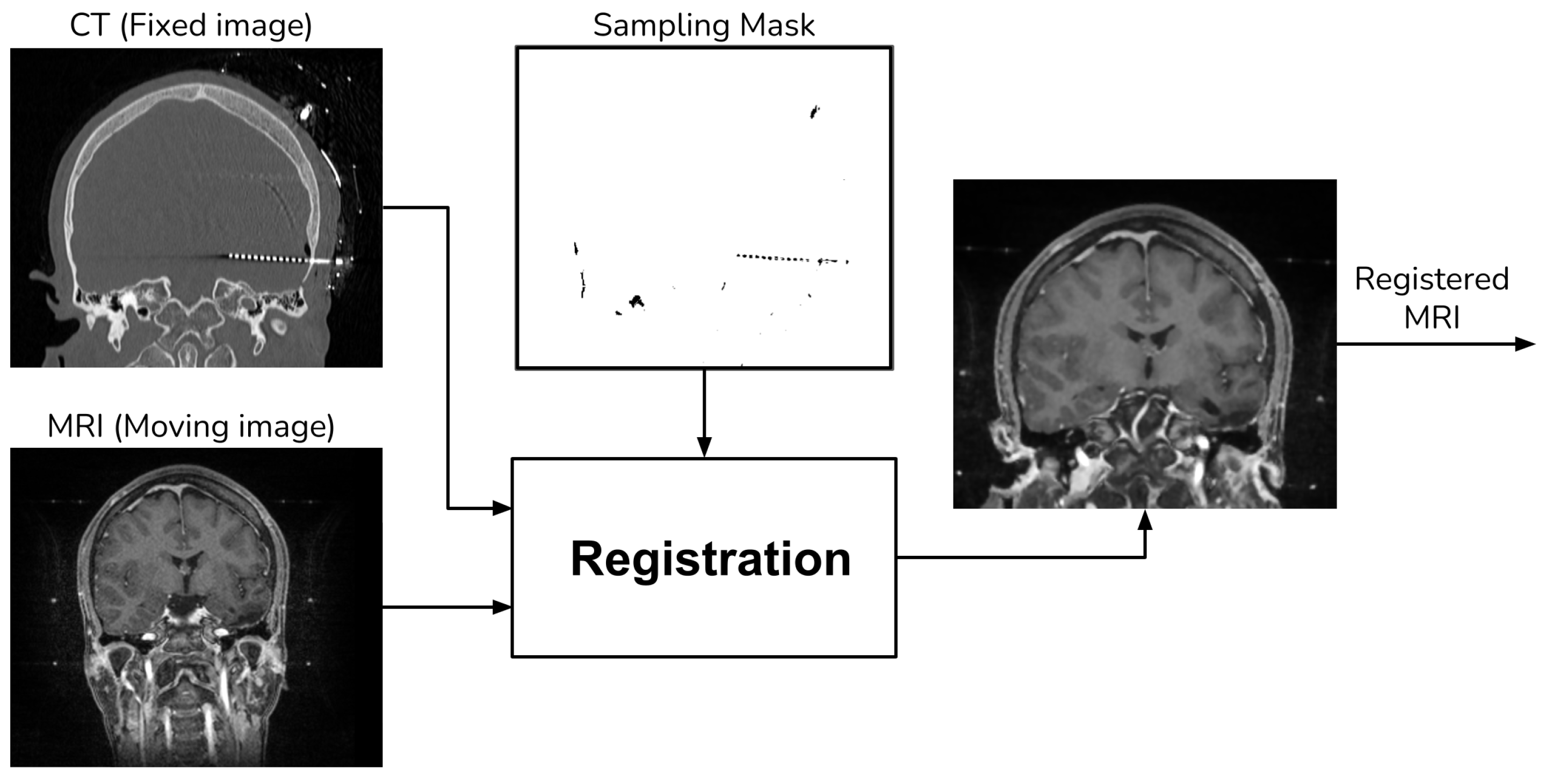

For MRI registration, we employed a rigid transformation optimized with a gradient descent algorithm, using Mutual Information (MI) as the similarity metric. We opted for this registration approach because MI is computed from the joint probability distribution of the image intensities [10,11], and MI-based methods have been shown to be more effective in multi-modal registration [13]. To enhance the registration process, we add a step that samples only the voxels that do not contain electrodes when computing the MI. We achieve this by creating a mask through the application of a NOT operation to the segmented electrodes, as detailed in Section 2.1. Figure 4 shows a schema of the registration procedure.

Figure 4. Registration procedure.
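To illustrate why restricting the MI computation to non-electrode voxels can matter, the following toy sketch estimates MI from a joint histogram, with and without a sampling mask. It is an assumption-laden illustration: the histogram estimator, the bin count, the function name `mutual_information`, and the synthetic volumes are all hypothetical and are not taken from the authors' pipeline.

```python
import numpy as np


def mutual_information(fixed, moving, mask=None, bins=32):
    """Histogram-based MI (in nats) over the voxels selected by `mask`."""
    if mask is None:
        mask = np.ones(fixed.shape, dtype=bool)
    f = fixed[mask].ravel()
    m = moving[mask].ravel()
    joint, _, _ = np.histogram2d(f, m, bins=bins)
    pxy = joint / joint.sum()              # joint probability
    px = pxy.sum(axis=1, keepdims=True)    # marginal of the fixed image
    py = pxy.sum(axis=0, keepdims=True)    # marginal of the moving image
    nz = pxy > 0                           # skip empty bins to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


# Synthetic, perfectly aligned volumes (hypothetical data).
rng = np.random.default_rng(0)
mri = rng.normal(size=(16, 16, 16))
ct = 2.0 * mri + 1.0        # CT intensities fully determined by the MRI
ct[8, 8, :] = 3000.0        # one bright electrode-like line in the CT

sampling_mask = ct < 1500.0  # NOT of the thresholded electrode voxels
mi_all = mutual_information(ct, mri)
mi_masked = mutual_information(ct, mri, sampling_mask)
```

In this toy case the bright voxels stretch the CT histogram range, so the unmasked estimate loses almost all of the shared information, while the masked estimate recovers it. Registration toolkits usually accept such a mask directly; SimpleITK, for example, exposes `SetMetricFixedMask` on its `ImageRegistrationMethod`, although the text does not state which toolkit was used.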

2.3. Final CT Electrode Segmentation

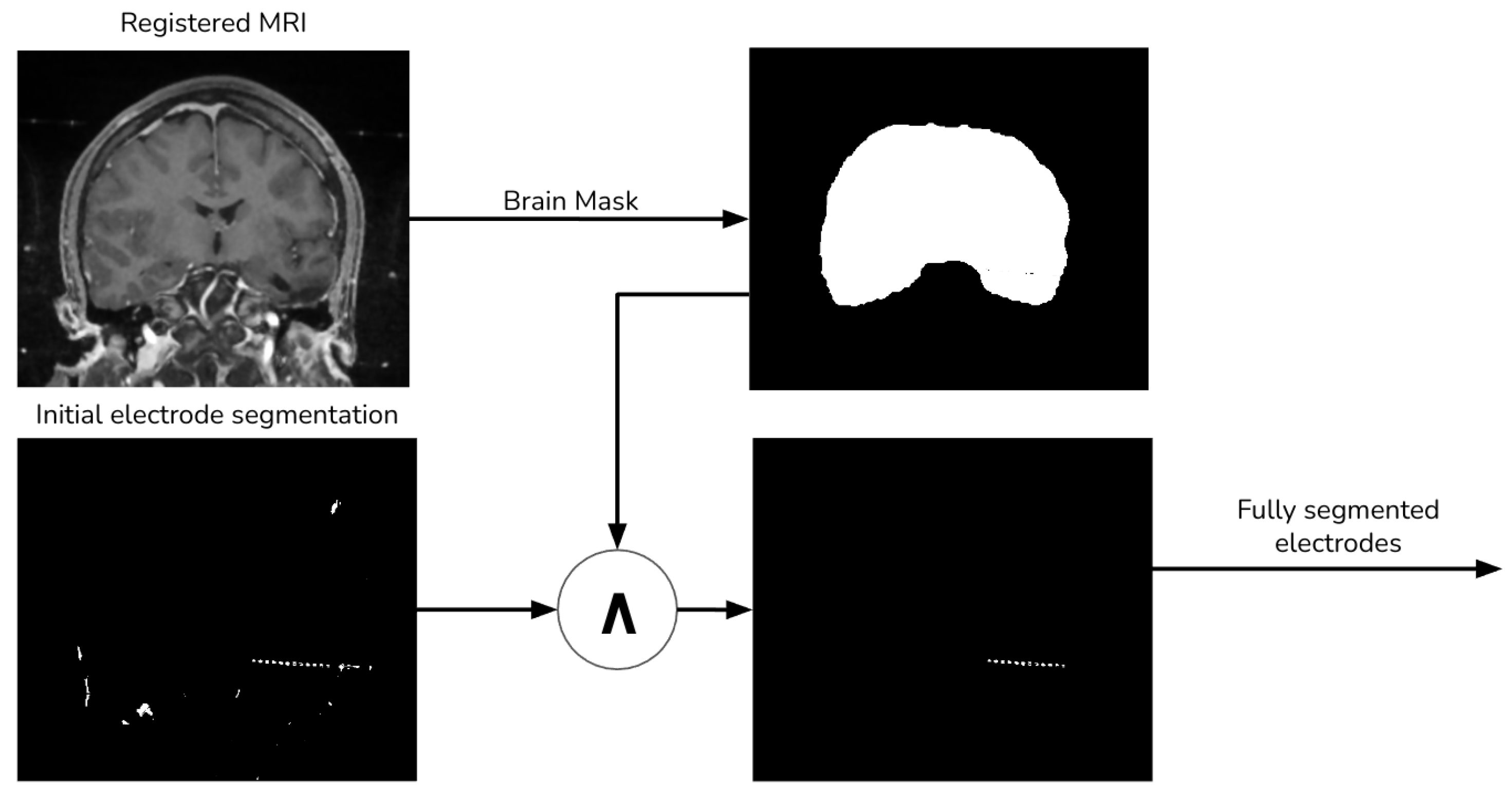

The preliminary electrode segmentation employs both thresholding and morphological operations. However, this approach might segment some bone tissue alongside the electrodes, which must be excluded from the final image. To address this, we use the aligned MRI to generate a brain mask with the aid of the ROBEX tool, as depicted in Figure 5.

Figure 5. Final electrode procedure.

2.4. Image Merging

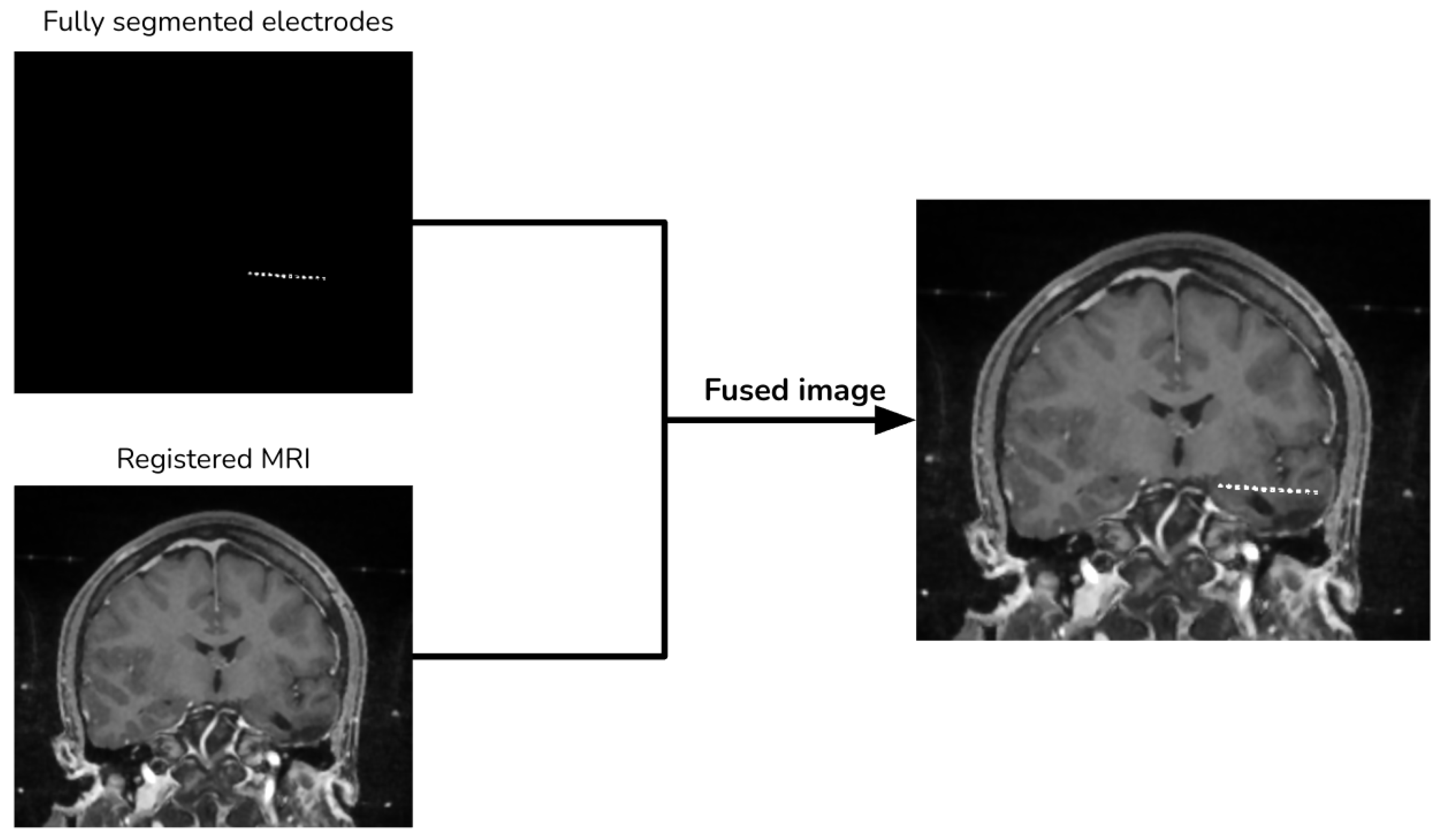

Finally, we add the segmented electrodes to the aligned MRI to produce the fused image (Figure 6).

Figure 6. Image merging procedure.
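A minimal sketch of the merging step, assuming that "adding" the electrodes means overwriting the corresponding MRI voxels with a fixed bright intensity. The function name `merge_electrodes` and the choice of intensity are hypothetical; the text does not specify how the electrode voxels are written into the fused image.

```python
import numpy as np


def merge_electrodes(mri, electrode_mask, electrode_value):
    """Copy of the aligned MRI with electrode voxels set to one intensity."""
    if mri.shape != electrode_mask.shape:
        raise ValueError("MRI and electrode mask must share the same grid")
    fused = mri.copy()                      # leave the input MRI untouched
    fused[electrode_mask] = electrode_value
    return fused


# Hypothetical 2 x 2 x 2 example with a single electrode voxel.
mri = np.arange(8.0).reshape(2, 2, 2)
mask = np.zeros(mri.shape, dtype=bool)
mask[0, 1, 1] = True
fused = merge_electrodes(mri, mask, mri.max() + 1.0)
```

Choosing a value above the MRI maximum keeps the electrodes visually distinct from tissue; this is a design assumption of the sketch rather than a documented choice.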

3. Validation Method

Given the lack of a standardized validation methodology for multi-modal image fusion, as highlighted in our 2021 literature review [13], we employed two distinct methods to validate our proposed technique. First, we used the RIRE dataset to generate synthetic data. Second, we used four pairs of MRI and CT images from SEEG exams, measuring the performance by identifying five anatomical structures in the CT and MRI.

3.1. Validation Using RIRE Dataset

We selected eight images from the RIRE dataset containing both MRI and CT images. To simulate the presence of electrodes, we introduced cylinders into the CT images. Subsequently, we performed a rigid registration on the images without electrodes. The transformation obtained from this registration served as a reference for further analysis.
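One way to simulate an electrode is to paint a bright straight shaft into the CT volume. The sketch below is only an assumption of how this could be done: the function name, the voxel-space endpoints, the radius, and the 2500 HU intensity are hypothetical, and the shape produced is a cylinder with rounded ends (a capsule) rather than a strict cylinder.

```python
import numpy as np


def add_cylinder(volume, start, end, radius, value):
    """Copy of `volume` with voxels within `radius` of segment start-end set to `value`."""
    out = volume.copy()
    p0 = np.asarray(start, dtype=float)
    p1 = np.asarray(end, dtype=float)
    axis = p1 - p0                          # assumes start != end
    length_sq = float(axis @ axis)
    # Voxel index coordinates, shape (X, Y, Z, 3).
    grid = np.stack(
        np.meshgrid(*[np.arange(n) for n in volume.shape], indexing="ij"),
        axis=-1,
    ).astype(float)
    # Projection of each voxel onto the segment, clamped to its endpoints.
    t = np.clip(((grid - p0) @ axis) / length_sq, 0.0, 1.0)
    closest = p0 + t[..., None] * axis
    dist = np.linalg.norm(grid - closest, axis=-1)
    out[dist <= radius] = value
    return out


# Hypothetical empty CT volume with one synthetic electrode along the x axis.
ct = np.zeros((20, 20, 20))
ct_with_electrode = add_cylinder(ct, (2, 10, 10), (17, 10, 10), radius=1.5, value=2500.0)
n_painted = int((ct_with_electrode == 2500.0).sum())
```

Because the electrode-free pair is registered first, the resulting transformation can serve as the reference against which registrations of the modified CT are compared, as the paragraph above describes.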

Furthermore, to measure the performance, we compared the location of brain structures in the registered images. This comparison was conducted using the Euclidean distance between the reference structure in the CT and the corresponding structure in the registered MRI.

3.2. Validation with Brain Structures

We measure the performance of the fusion method by using the subsequent brain structures in the CT image as reference points: (i) the Sylvian aqueduct; (ii) the anterior commissure; (iii) the pineal gland; (iv) the right lens; (v) the left lens.

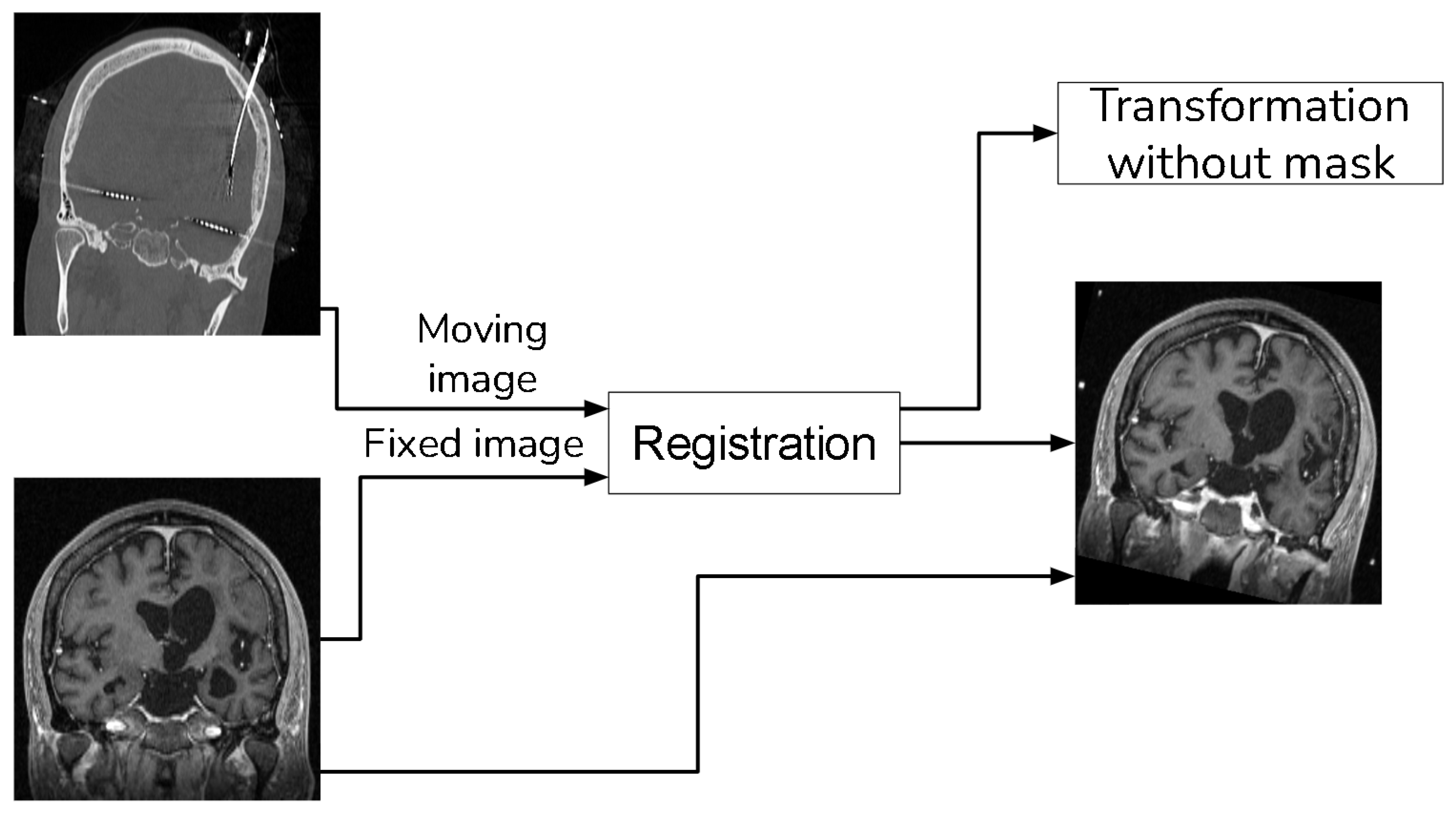

With the guidance of a medical expert, we manually localized the structures of interest, obtaining their positions in both the CT and registered MRI images using 3D Slicer version 4.11. Upon identifying these structures, we measured the error as the Euclidean distance between the reference structure point in the CT image and the corresponding point in the registered MRI using Figure 1. We evaluated the error in images resulting from two distinct methods: (i) our proposed approach, which incorporates a sampling mask during registration, and (ii) a reference method from the existing literature that conducts registration without a sampling mask. The purpose of this validation was to determine whether the use of a mask reduces registration errors. Figure 8 and Figure 9 show the methods that were compared.

Figure 8. Proposed method that uses a sampling mask for the registration.

Figure 9. Method to compare that does not use a sampling mask in the registration.
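The landmark-based error described in this section reduces to a Euclidean distance per structure. The sketch below assumes the landmark positions are available as (x, y, z) coordinates in millimetres; the function name and all coordinate values are hypothetical placeholders, not measurements from the study.

```python
import math


def landmark_errors(ct_points, mri_points):
    """Euclidean distance per structure between CT and registered-MRI landmarks."""
    return {
        name: math.dist(ct_points[name], mri_points[name])
        for name in ct_points
    }


# Hypothetical coordinates in mm for two of the five structures.
ct_points = {
    "anterior commissure": (0.0, 0.0, 0.0),
    "pineal gland": (1.0, -30.0, 5.0),
}
mri_points = {
    "anterior commissure": (3.0, 4.0, 0.0),
    "pineal gland": (1.0, -30.0, 7.0),
}

errors = landmark_errors(ct_points, mri_points)
mean_error = sum(errors.values()) / len(errors)
```

Running the same function on the landmarks from the masked and unmasked registrations gives directly comparable per-structure errors.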

In our validation process, we also employed global fusion metrics to assess potential distortions arising from the fusion procedures. The metrics we utilized include:

- Mutual Information (MI):

Estimates the amount of information transferred from the source images into the fused image [17]. Given the input images and the fused image, the MI is computed from their joint and marginal intensity distributions.

- Structural Similarity Index (SSIM):

Measures the preservation of structural information by separating the image into three components: luminance, contrast, and structure [17].

- Root Mean Square Error (RMSE):

Measures the square root of the mean squared difference between the source image and the fused image [17].

- Peak Signal-to-Noise Ratio (PSNR):

The PSNR is calculated from the RMSE for an image of given dimensions [17].
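As an illustration of how the RMSE and the PSNR relate, the following sketch uses their common textbook definitions. The exact formulation in [17] may differ in notation, and the peak intensity passed to `psnr` is an assumption that depends on the image data type.

```python
import math

import numpy as np


def rmse(reference, fused):
    """Root mean square error between two images of identical shape."""
    diff = reference.astype(float) - fused.astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(reference, fused, peak):
    """Peak signal-to-noise ratio in dB, derived from the RMSE."""
    error = rmse(reference, fused)
    if error == 0.0:
        return math.inf                 # identical images
    return 20.0 * math.log10(peak / error)


# Hypothetical 4 x 4 images differing by a constant offset of 2.
reference = np.zeros((4, 4))
fused = np.full((4, 4), 2.0)
example_rmse = rmse(reference, fused)
example_psnr = psnr(reference, fused, peak=255.0)  # assumes an 8-bit range
```

A lower RMSE and a higher PSNR both indicate that the fused image stays closer to the reference, which is how these metrics are used here to check for distortion.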

4. Results

We validated the performance of the fusion method following the two methodologies in Section 3. For the first method, we used eight pairs of CT and MRI images from the RIRE dataset, with the CT images generated as described in Section 3.1. We compared the procedure shown in Figure 8 against a fusion procedure that does not employ a sampling mask of the brain tissue, shown in Figure 9. Both methods used a rigid registration with MI as the similarity metric and gradient descent for the optimization. We compared the methods using descriptive statistics of central tendency and variation, following the validation from Section 3.1. These results are summarized in the box plot shown in Figure 10.

For the second validation, only a limited number of images was available for evaluation. Given this constraint, we compared the methods individually for each of the four cases. We chose a scatter plot to visualize the error dispersion for both methods, because it shows every individual data point and makes patterns or anomalies easier to discern in a small dataset. The results of this comparison are illustrated in Figure 11.

displays the Euclidean distance between the reference points and the resulting points of the transformation from the compared methods. From the data, we can observe that the difference in the Euclidean distance for our method is significantly lower in images 3, 6, and 8. This is mainly caused by the differences in the original images that have some variations in brain tissue, as shown in Figure 12, Figure 13 and Figure 14. Due to some electrodes passing through these areas with variations, the sampling in the registration does not use these voxels to compute the transformation, thus improving the registration when the mask is used. The results are represented in Figure 10, where our method using a sampling mask yields a Euclidean distance of 1.3176 mm with a standard deviation of 0.8643. In contrast, the method without a sampling mask yields a Euclidean distance of 1.2789 mm with a standard deviation of 5.2511. These findings suggest that the use of the mask improves the registration when there is a great difference in the tissue between the MRI and CT images due to the reduction in voxel sampling of these varying tissues in the registration process.

For the second validation, we use the methodology described in Section 3.2 in four pairs of MRI and CT images obtained from Clínica Imbanaco Grupo Quirón Salud. displays the localized points in the CT image that we used as a reference in our analysis. The localized points for the structure in the MRI registered with our proposed methods are shown in , while the points in the MRI from the method to compare are displayed in .

After the structure localization, we compute the Euclidean distance between the points from the registered MRI and the points of the CT images. This process is applied to the resulting images from our proposed method and from the method that uses no sampling mask in the registration. The resulting Euclidean distances are shown in .

The validation results, displayed in , show that our method has a higher Euclidean distance compared to the method without a mask for images from patient 1, patient 3, and patient 4. However, our method achieves a lower Euclidean distance in images from patient 2. Further analysis of the two methods using global performance metrics, as presented in , reveals a relatively low difference, indicating minimal distortion in the images when comparing the two methods.

While the validation with the limited dataset showed some promising results, it still requires further refinement. While we were able to reduce the Euclidean distances for all structures in patient 2’s images and for some structures, such as the anterior commissure and the pineal gland, in patient 3’s images, our method displayed a higher Euclidean distance in images for patient 1 and patient 4. We employed global fusion metrics from to analyze if the difference in distance was caused by any distortion in the registration procedure. However, these metrics did not reveal any significant difference related to distortion in the registered images using any of the compared registration methods.

While the application of the mask did increase the error for certain images, our method with the mask reduced the average error and overall dispersion, as depicted in Figure 11. This indicates the potential of our method. However, conclusively affirming the improvement introduced by our approach requires a larger dataset for this validation methodology.

5. Discussion

Our proposed image fusion method between MRI and CT, which considers the electrodes, addresses the identified problem, in which the presence of external objects produced a registration error. This approach improved the registration of the images from the RIRE dataset. In the second validation stage, our method demonstrated a lower average error, yet we observed instances where performance was lower when the sampling mask was applied. This could be attributed to the potential importance of information proximal to the electrode for the calculation of similarity metrics during the registration procedure. However, even in these cases, the error increase was minimal compared to the reduction observed in the cases where the error was lower.

Another challenge was the absence of a standardized validation method for multimodal fusion, prompting us to develop our own method using available data. This included the limited dataset from Imbanaco and the deprecated RIRE dataset.

We also found a lack of research on methods for electrode segmentation in SEEG. This necessitated the development of our own segmentation method, a task that was not initially included in the project scope.

All objectives of the project were achieved. The project successfully identified primary techniques for image registration and fusion between MRI and CT images, developed a method to fuse these images when external objects were present, and conducted an evaluation to measure and compare the performance of the designed method. In the evaluations, the method outperformed other existing state-of-the-art techniques in certain scenarios.

6. Conclusions

We have developed and presented an image fusion method for combining CT and MRI from SEEG exams. Our approach aims to minimize misregistration errors between pre-surgical MRI and post-surgical CT images by using a sampling mask of all non-electrode voxels in the post-surgical CT image.

We acknowledged the lack of a standard validation method for image fusion and registration in brain images, mainly when external objects are present in one of the images. We addressed this by employing two evaluation approaches: (i) a simulation-based evaluation method with synthetic electrodes generated from the RIRE dataset; and (ii) an evaluation using four image pairs acquired from patients at Clinica Imbanaco Grupo Quirón Salud, where we measured the error using five anatomical structures that can be localized in the pre-surgical MRI and post-surgical CT images.

Our findings indicate that the proposed method outperforms the existing state-of-the-art techniques in the simulation-based evaluation using the RIRE dataset. In the evaluation using clinical images, we observed that our method demonstrated superior performance in some cases, while showing a slight decrease in performance in others. Despite this variability, the overall average Euclidean distance was lower for our method, suggesting an improvement in registration accuracy.

We recommend enhancing the second validation methodology by increasing the number of images and refining the localization of brain structures to further reduce bias in the evaluation results. This would enable a more comprehensive assessment of the proposed fusion method’s performance for clinical scenarios.

In conclusion, our proposed image fusion method shows promise for improving the accuracy of the registration in SEEG. With further development and refinement, this approach has the potential to significantly impact the field of epilepsy treatment, offering further aid in the localization of epileptogenic tissue when SEEG is employed.

References

1. Hauser, W.; Hesdorffer, D.; Epilepsy Foundation of America. Epilepsy: Frequency, Causes, and Consequences; Number v. 376; Demos Medical Pub: New York, NY, USA, 1990.

2. Murray, C.J.L.; Lopez, A.D.; World Health Organization. Global Comparative Assessments in the Health Sector: Disease Burden, Expenditures and Intervention Packages; Murray, C.J.L., Lopez, A.D., Eds.; World Health Organization: Geneva, Switzerland, 1994.

3. Baumgartner, C.; Koren, J.P.; Britto-Arias, M.; Zoche, L.; Pirker, S. Presurgical epilepsy evaluation and epilepsy surgery. F1000Research 2019, 8.

4. Zhang, C.; Kwan, P. The Concept of Drug-Resistant Epileptogenic Zone. Front. Neurol. 2019, 10, 558.

5. Youngerman, B.E.; Khan, F.A.; McKhann, G.M. Stereoelectroencephalography in epilepsy, cognitive neurophysiology, and psychiatric disease: Safety, efficacy, and place in therapy. Neuropsychiatr. Dis. Treat. 2019, 15, 1701–1716.

6. Iida, K.; Otsubo, H. Stereoelectroencephalography: Indication and Efficacy. Neurol. Med.-Chir. 2017, 57, 375–385.

7. Gross, R.E.; Boulis, N.M. (Eds.) Neurosurgical Operative Atlas: Functional Neurosurgery, 3rd ed.; Thieme/AANS: Stuttgart, Germany, 2018.

8. Perry, M.S.; Bailey, L.; Freedman, D.; Donahue, D.; Malik, S.; Head, H.; Keator, C.; Hernandez, A. Coregistration of multimodal imaging is associated with favourable two-year seizure outcome after paediatric epilepsy surgery. Epileptic Disord. 2017, 19, 40–48.

9. Mitchell, H.B. Image Fusion: Theories, Techniques and Applications; Springer: Berlin/Heidelberg, Germany, 2010.

10. Xu, R.; Chen, Y.W.; Tang, S.; Morikawa, S.; Kurumi, Y. Parzen-Window Based Normalized Mutual Information for Medical Image Registration. IEICE Trans. Inf. Syst. 2008, 91, 132–144.

11. Oliveira, F.P.M.; Tavares, J.M.R.S. Medical image registration: A review. Comput. Methods Biomech. Biomed. Eng. 2014, 17, 73–93.

12. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 5 April 2023).

13. Perez, J.; Mazo, C.; Trujillo, M.; Herrera, A. MRI and CT Fusion in Stereotactic Electroencephalography: A Literature Review. Appl. Sci. 2021, 11, 5524.

14. Iglesias, J.E.; Liu, C.Y.; Thompson, P.M.; Tu, Z. Robust Brain Extraction across Datasets and Comparison with Publicly Available Methods. IEEE Trans. Med. Imaging 2011, 30, 1617–1634.

15. Otsu, N. A threshold selection method from gray level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

16. Jayakar, P.; Gotman, J.; Harvey, A.S.; Palmini, A.; Tassi, L.; Schomer, D.; Dubeau, F.; Bartolomei, F.; Yu, A.; Kršek, P.; et al. Diagnostic utility of invasive EEG for epilepsy surgery: Indications, modalities, and techniques. Epilepsia 2016, 57, 1735–1747.

17. Du, J.; Li, W.; Lu, K.; Xiao, B. An overview of multi-modal medical image fusion. Neurocomputing 2016, 215, 3–20.