1. Introduction

The cornea is essential for vision and contributes three-quarters of the eye’s refractive power. While cataracts in the developing world and age-related macular degeneration (in older patients) in the developed world are recognised as the leading causes of visual impairment, corneal blindness affects all ages and is a leading cause of irreversible visual impairment [1]. Despite continuous efforts to combat it, infectious corneal ulceration (infectious keratitis) still receives insufficient global attention [1]. Corneal ulceration (opacity) is among the top five causes of blindness and vision impairment globally [1,2,3]. In 2020, an estimated 2.096 million people over 40 years of age were blind, and 3.372 million had moderate to severe vision impairment, due to non-trachomatous corneal opacity [2].

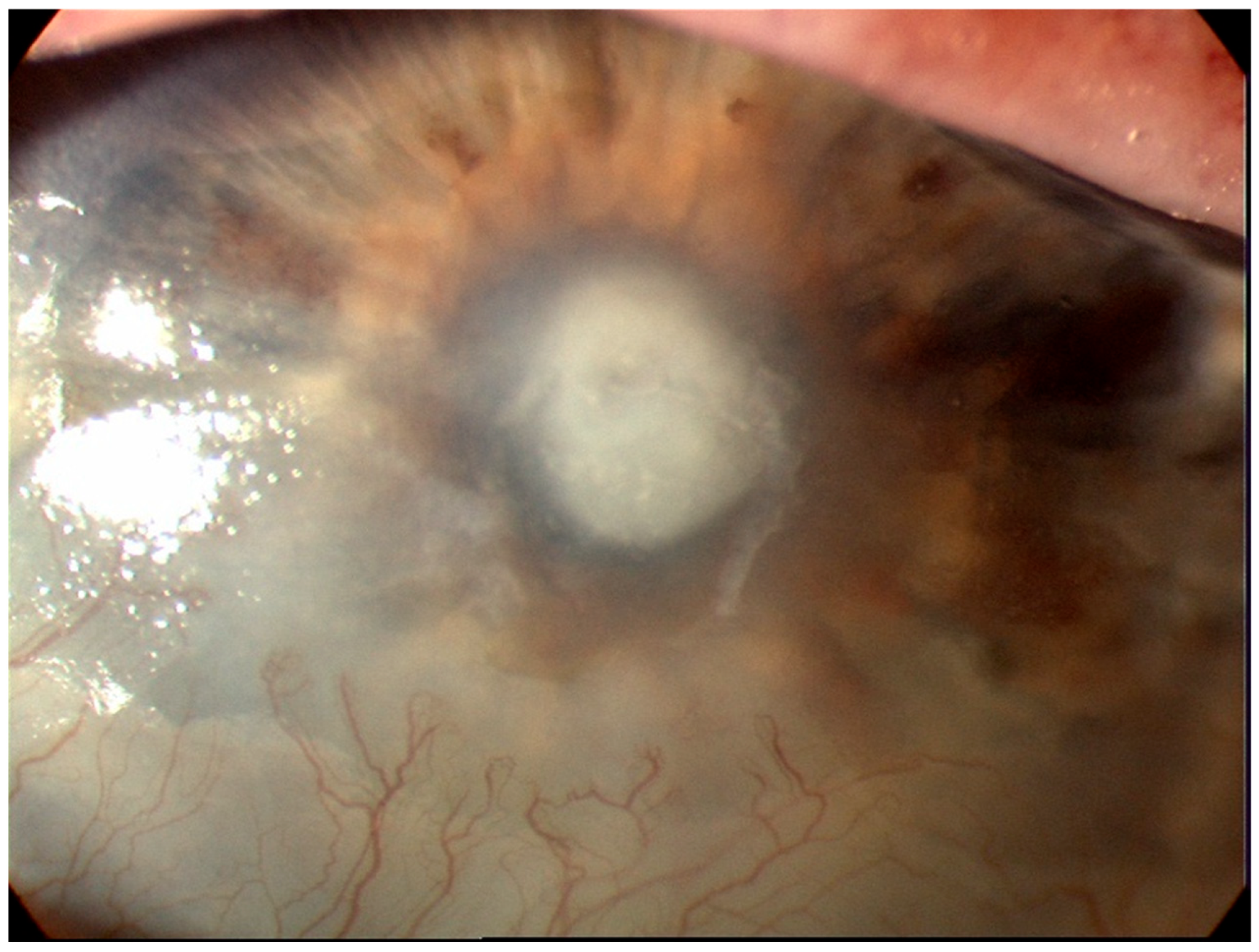

Infectious keratitis is an ocular emergency requiring prompt attention, as it can progress very rapidly and lead to severe complications, such as loss of vision or even of the eye [4]. It is diagnosed from the patient’s history, clinical examination under a slit lamp, and microbiology results from staining and culture of scrapings from the corneal ulcer [4,5]. Diagnosis hinges on identifying characteristic features on slit lamp examination with fluorescein staining [6,7] (Figure 1). In addition to aiding diagnosis, slit lamp biomicroscopy is used to determine the severity of the infection [8].

Figure 1. Corneal ulceration in bacterial keratitis. Note there is an infiltrate underlying an epithelial defect (Image courtesy of Prof. Stephanie Watson).

Infectious keratitis is most often caused by bacteria; other important causal organisms include viruses, fungi and parasites [4]. Bacterial keratitis is mostly caused by Staphylococcus spp., Pseudomonas aeruginosa and Streptococcus pneumoniae. Patients generally present with a red eye, discharge, an epithelial defect, corneal infiltrates and sometimes hypopyon [9]. Viral keratitis is commonly caused by herpes simplex virus (HSV). The type of HSV keratitis is determined by the clinical features observed on slit lamp examination. A dendritic or geographic ulcer is found in ‘epithelial HSV keratitis’. Stromal haze with or without ulcers, lipid keratopathy, stromal oedema, scarring, corneal thinning or vascularisation are found in ‘stromal HSV keratitis’. Stromal oedema and keratic precipitates are found in ‘endothelial HSV keratitis’. Stromal oedema, keratic precipitates and anterior chamber cells are found in ‘HSV keratouveitis’ [10,11,12].

Fungal keratitis is caused by filamentous (Fusarium spp., Aspergillus spp.) or yeast (Candida spp.) fungi. Clinical findings may include a corneal ulcer with irregular feathery margins, elevated borders and a dry, rough texture, satellite lesions, Descemet’s folds, hypopyon, a ring infiltrate, endothelial plaque, anterior chamber cells and keratic precipitates [13,14,15]. Parasitic keratitis caused by Acanthamoeba spp. is an uncommon cause of infectious keratitis and is typically chronic and progressive. A unilateral, paracentral corneal ulcer with a ring infiltrate is commonly seen in patients with this infection. Patients may present with eyelid ptosis, conjunctival hyperaemia and pseudodendrites. As the infection progresses, deep stromal infiltrates, corneal perforation, satellite lesions, scleritis and anterior uveitis with hypopyon may develop. Symptoms may include severe eye pain, decreased vision, foreign body sensation, photophobia, tearing and discharge [16,17,18].

Culture of corneal scrapings is the gold standard for diagnosis and for identifying and isolating the causal organism [4,5,19]. However, culture positivity rates range from 38% to 66% [4]. Antimicrobial resistance testing is routinely performed on bacterial isolates, with results typically available after 48 h, so empiric antimicrobial therapy is commenced whilst awaiting these results to prevent severe complications [4]. In recent years, owing to the limitations of corneal scraping, a range of imaging techniques has been used to aid the diagnosis and severity grading of infectious keratitis. Understanding the current status of corneal imaging for keratitis will benefit both clinicians in practice and researchers in the field, as imaging technologies may enable early diagnosis of the different types of infectious keratitis. The aim of this review was to describe the corneal imaging diagnostic tests in use for infectious keratitis and to discuss the progress of artificial intelligence in diagnosing and differentiating the different types of infectious keratitis.

2. Slit Lamp Biomicroscopy

The slit lamp is a stereoscopic biomicroscope that produces a focused beam of light of adjustable height, width and angle to visualise and measure the anatomy of the adnexa and anterior segment of the eye [20]. It is essential for the examination and diagnosis of patients with infectious keratitis [4]. Slit lamp photography started in the late 1950s, but the arrival of digital cameras in the 2000s substantially facilitated its use in ophthalmology [21]. Two types of digital camera are available: single lens reflex (SLR) and ‘point-and-shoot’. The choice between them for clinical use depends on budget, ease of use, photographic requirements and the user’s ability. SLRs are heavier, bulkier and more costly than ‘point-and-shoot’ cameras. Another key feature to consider when selecting a camera is its resolution in megapixels; one megapixel is equivalent to 1 million pixels. A photograph taken at 6 megapixels can be printed at up to 11 inches (28 cm) × 14 inches (35.5 cm) without ‘pixelation’ (visible pixels), and a 3.2-megapixel camera can meet the needs of clinical photography [22]. Other important features include a macro mode for close-up photography, a flash mode to light a dark scene or the object being photographed, additional flash fixtures to create diffuse illumination, and ‘image stabilization’ or ‘vibration reduction’ technology to minimise camera shake and avoid blurred photographs [22].
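The relationship between megapixels and print size is simple arithmetic. The Python sketch below assumes a 3:2 sensor aspect ratio and a print density of 200 pixels per inch (ppi); both values, and the function names, are illustrative assumptions rather than figures from the cited source, and the density at which pixelation becomes visible also depends on viewing distance.

```python
import math

def pixel_dimensions(megapixels, aspect=(3, 2)):
    """Approximate image width and height in pixels for a megapixel count.

    Assumes the pixels are spread over an aspect[0]:aspect[1] frame.
    """
    total = megapixels * 1_000_000          # 1 megapixel = 1 million pixels
    ratio = aspect[0] / aspect[1]
    width = math.sqrt(total * ratio)
    height = width / ratio
    return width, height

def max_print_inches(megapixels, ppi=200, aspect=(3, 2)):
    """Largest print size (inches) at the given density; ppi=200 is an assumption."""
    width, height = pixel_dimensions(megapixels, aspect)
    return width / ppi, height / ppi

for mp in (3.2, 6, 50):
    w, h = max_print_inches(mp)
    print(f"{mp} MP -> about {w:.1f} x {h:.1f} inches at 200 ppi")
```

Under these assumptions, a 6-megapixel image is about 3000 × 2000 pixels and prints at roughly 15 × 10 inches; printing its long edge at 14 inches corresponds to about 214 ppi.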

An alternative to digital cameras is the smartphone, which became available in the late 2000s. A smartphone is a mobile phone that can run many advanced applications; in ophthalmology, these include patient and physician education tools, testing tools and photography [23]. Newer smartphones have rear camera sensors with resolutions of up to 50 megapixels, lens correction and combined optical and electronic image stabilisation [24]. Because it is difficult to hold a smartphone while operating the slit lamp, adapters to mount smartphones have been developed [21]. However, these adapters are not universal: they are designed for particular smartphone or slit lamp models, and once attached, they prevent binocular operation of the slit lamp.

To overcome these issues, Muth et al. evaluated a new adapter that could be mounted on any smartphone or slit lamp and easily moved aside to enable binocular use of the slit lamp [21]. The images taken with the smartphone were of high overall quality and as good as those taken with a slit lamp camera [21]. Current smartphones have built-in cameras suitable for slit lamp imaging. Provided the smartphone is positioned and handled correctly, slit lamp image quality depends on three factors: the resolution of the smartphone camera sensor, the resolution of the slit lamp or microscope, and the focal length of the smartphone camera system. The final image also depends on the smartphone’s software settings, including autofocus, shutter speed and, when a compressed image format (e.g., .jpg) is used, internal post-processing algorithms. Images captured in raw format by newer smartphones require more software-based post-processing [21,25].

3. Optical Coherence Tomography

In 1994, it was shown that the anterior chamber could be imaged using optical coherence tomography (OCT) at the same wavelength (830 nm) used in posterior segment imaging [7,26]. This advance enabled imaging and analysis of structures such as the cornea and the anterior chamber angle. The predominant market for OCT has been retinal imaging, and the first commercially available anterior segment optical coherence tomography (AS-OCT) devices were modified posterior segment devices. Some of these AS-OCT devices were stand-alone, whereas others were posterior segment OCT devices modified with, for example, an additional lens. Modifications in 2001 to the light source and lens of the AS-OCT enabled the use of a longer wavelength (1310 nm), allowing higher image resolution and more precise measurement [7,27].

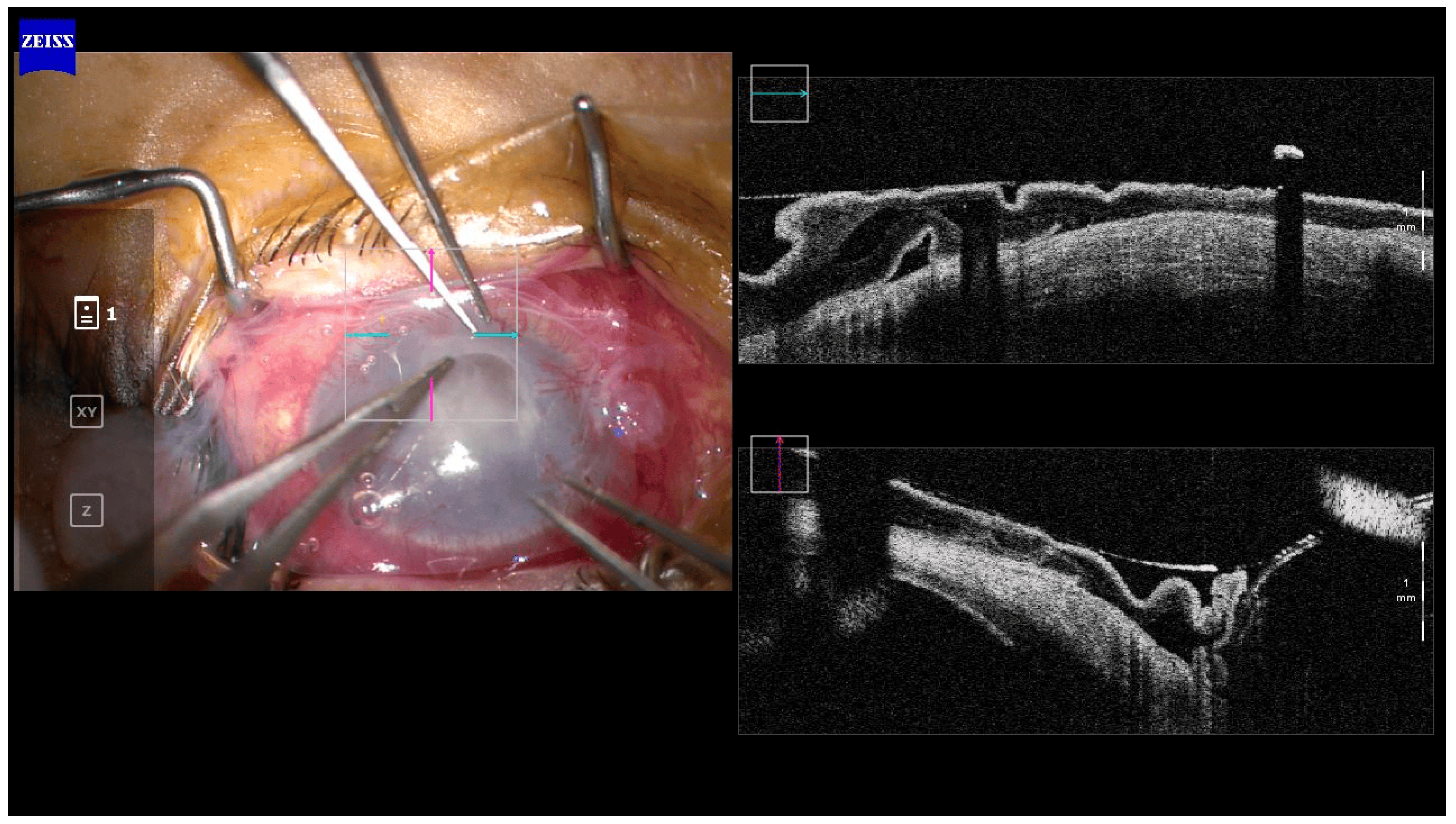

AS-OCT is now a well-recognised method for imaging the cornea and is often used for anterior chamber angle imaging in glaucoma [7,19]. Its advantage is the ability to accurately measure the depth and width of corneal ulcers, infiltrates and haze, so that the progress of corneal pathologies, such as superficial and deep infectious keratitis, can be monitored [19]. Other applications of AS-OCT include measuring corneal thickness to determine the risk of corneal perforation, predicting the response to photoactivated chromophore for keratitis corneal cross-linking (PACK-CXL), and highlighting corneal interface pathologies, such as interface infectious keratitis following lamellar keratoplasty (a hyper-reflective band at the graft-host interface) [28], post-LASIK epithelial ingrowth (at the flap-host interface) [29] and valvular and direct non-traumatic corneal perforations associated with infectious keratitis [19,30]. With the increasing popularity of Small Incision Lenticule Extraction (SMILE) as a refractive procedure, AS-OCT is likely to have a role in identifying and defining interface infections [31,32]. For bacterial keratitis, AS-OCT is currently an auxiliary diagnostic tool. Intra-operative AS-OCT is also emerging as a useful tool and may have applications in surgery for infectious keratitis by delineating the involved structures and identifying corneal thinning (Figure 2).

Figure 2. Intra-operative OCT in a case of amniotic membrane transplant for a persistent epithelial defect post-microbial keratitis. Corneal thinning can be seen in the area of the defect. (Image courtesy of Prof. Stephanie Watson).

3.1. Types of AS-OCT

Optical coherence tomography devices in clinical use can be classified by their image sampling method as time domain, spectral domain or swept source. Each class has a different sampling speed and resolution, which has implications for the application of AS-OCT.
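In time-domain OCT, depth is scanned by moving a reference mirror, whereas spectral-domain and swept-source systems record the interference spectrum and recover depth with a Fourier transform, which is why they are much faster. The NumPy sketch below illustrates only that Fourier step for a single reflector. It is an idealised model: the 800–860 nm band, the sample count, the flat source spectrum, uniform sampling in wavenumber and the neglect of tissue refractive index are all assumptions, and `simulate_sd_oct_ascan` is a hypothetical helper, not part of any device software.

```python
import numpy as np

def simulate_sd_oct_ascan(depth_m, n_samples=2048,
                          lambda_min=800e-9, lambda_max=860e-9):
    """Simulate one spectral-domain OCT A-scan for a single reflector.

    Idealised assumptions: samples are evenly spaced in wavenumber
    k = 2*pi/lambda, the source spectrum is flat, and only the
    reference-sample interference term cos(2*k*z) is modelled.
    Returns (depth_axis_m, a_scan_magnitude).
    """
    k = np.linspace(2 * np.pi / lambda_max, 2 * np.pi / lambda_min, n_samples)
    dk = k[1] - k[0]
    spectrum = 1.0 + np.cos(2 * k * depth_m)      # DC term plus interference fringes
    spectrum = spectrum - spectrum.mean()         # remove the DC component
    spectrum = spectrum * np.hanning(n_samples)   # window to suppress side lobes
    a_scan = np.abs(np.fft.rfft(spectrum))        # Fourier transform: k -> depth
    # cos(2*k*z) completes z/pi cycles per unit of k, so depth = pi * frequency
    depth_axis = np.pi * np.fft.rfftfreq(n_samples, d=dk)
    return depth_axis, a_scan

depth_axis, a_scan = simulate_sd_oct_ascan(500e-6)   # reflector 0.5 mm deep
peak_depth = depth_axis[np.argmax(a_scan)]
print(f"recovered depth: {peak_depth * 1e6:.0f} µm "
      f"(depth bin {(depth_axis[1] - depth_axis[0]) * 1e6:.1f} µm)")
```

The spacing of the depth axis is inversely proportional to the sampled wavenumber range, so a broader spectral band gives finer depth sampling.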

4. In Vivo Confocal Microscopy

In vivo confocal microscopy (IVCM) provides a high-resolution, in vivo assessment of corneal structures and pathologies at the cellular and subcellular level [19]. IVCM images the three main corneal layers, nerves and cells at a resolution of about 1 µm, which is sufficient to resolve structures larger than a few micrometres, such as filamentous fungi or Acanthamoeba cysts [4,14,15,41]. The third-generation Heidelberg Retinal Tomograph (HRT3) laser scanning confocal microscope, used with the Rostock Cornea Module (RCM) (Heidelberg Engineering, Germany), uses a 670 nm red wavelength and produces high-resolution images with a lateral resolution close to 1 µm, an axial resolution of 7.6 µm and 400× magnification. This advance permitted the identification of yeasts, which first-generation confocal microscopes could not resolve [14,15,19,42,43].

IVCM has mainly been used to evaluate fungal and Acanthamoeba keratitis (AK) because its axial resolution limit of 5–7 µm is not sufficient to detect bacteria (less than 5 µm) or viruses (nanometre scale) [4,19,43]. IVCM is a valuable complement to microbiological diagnostic tests because it can identify these organisms rapidly, whereas the microbiological tests have a variable positive rate of 40–99% and a turnaround time of up to 2 weeks [19]. IVCM is therefore valuable in guiding initial therapy.

In fungal keratitis, IVCM sensitivity ranges from 66.7% to 94% and specificity from 78% to 100% [4,14,43,44,45,46]. Aspergillus spp. and Fusarium spp. are the main causal organisms of fungal keratitis [4,43]. On IVCM, Aspergillus spp. appear as septate hyphae 5–10 µm in diameter with dichotomous branching at 45-degree angles, whereas Fusarium spp. typically branch at 90 degrees. By comparison, basal corneal epithelial nerves branch more regularly than these hyper-reflective fungal elements, and stromal nerves are 25–50 µm in diameter, whereas Aspergillus spp. and Fusarium spp. hyphae are 200–400 µm in length [19,43]. Yeast-like fungi (Candida spp.) can also cause keratitis, particularly in immunosuppressed patients (Figure 6); on IVCM, they appear as elongated, hyper-reflective particles resembling pseudohyphae, 10–40 µm in length and 5–10 µm in width [19,43].

Figure 6. The clinical appearance of Candida albicans keratitis in a patient on immunosuppression. The arrow is pointing to the area of infiltrate. (Image courtesy of Prof. Stephanie Watson).

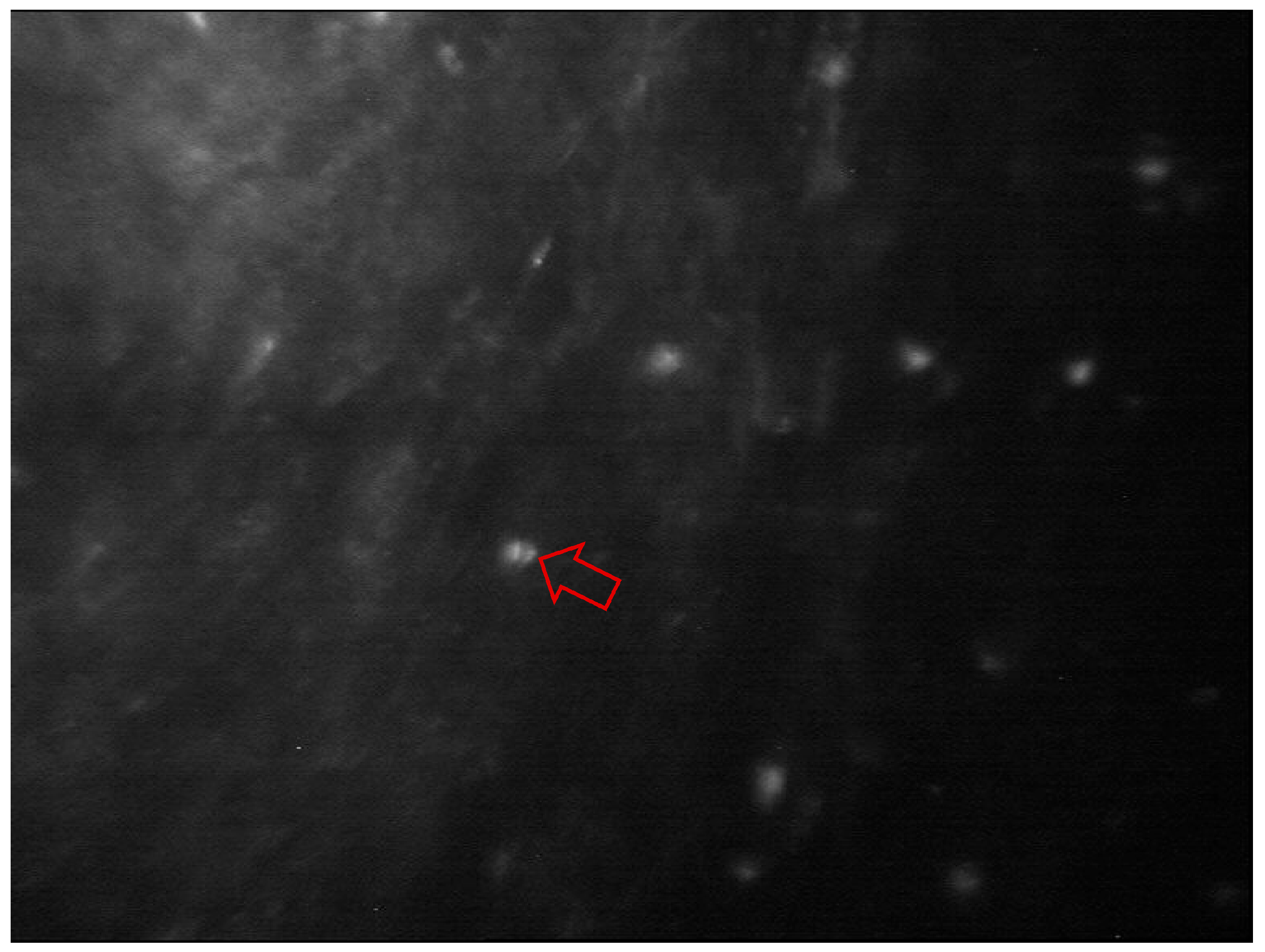

IVCM is a key diagnostic test in AK, with an overall sensitivity of 80–100% and specificity of 84–100% [19,44,45,46,47,48]. Acanthamoeba spp. can present as cysts or trophozoites. Cysts (the dormant form) appear as hyper-reflective, spherical, well-defined double-walled structures of ~15–30 µm in diameter in the epithelium or stroma. Trophozoites (the active form) appear as hyper-reflective structures of 25–40 µm, which are difficult to distinguish from leukocytes and keratocyte nuclei [4,19,49]. Acanthamoeba spp. can also present as bright spots, signet rings and perineural infiltrates. Perineural infiltrates are pathognomonic of AK and appear as reflective patchy lesions surrounded by hyper-reflective spindle-shaped material [19] (Figure 7).

Figure 7. In vivo confocal microscopy of Acanthamoeba keratitis. Perineural infiltrates appear as a reflective patchy lesion with surrounding hyperreflective spindle-shaped materials (red arrow). Image courtesy of Prof. Stephanie Watson.
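The sensitivity and specificity figures quoted above are calculated against a reference standard, such as culture. The sketch below shows the calculation from a 2 × 2 table; the counts are hypothetical and do not come from any cited study.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Sensitivity and specificity from a 2x2 table against a reference standard.

    tp/fn: infected eyes that the test called positive/negative
    tn/fp: non-infected eyes that the test called negative/positive
    """
    sensitivity = tp / (tp + fn)   # proportion of infected eyes detected
    specificity = tn / (tn + fp)   # proportion of non-infected eyes correctly ruled out
    return sensitivity, specificity

# Hypothetical counts for illustration only:
# 50 culture-positive fungal keratitis eyes and 50 culture-negative eyes.
sens, spec = diagnostic_metrics(tp=44, fn=6, tn=45, fp=5)
print(f"sensitivity {sens:.0%}, specificity {spec:.0%}")
```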

The advantages of IVCM include its non-invasiveness and its ability to identify the causal organism rapidly, in real time, and to determine the depth of the infection. This can guide antimicrobial therapy and assist in monitoring the infection; early identification and treatment of AK have been associated with an improved prognosis [4,16]. IVCM also facilitates longitudinal examinations in the same patient, which may help in determining resolution, and provides quantitative analysis of all corneal layers, nerves and cells to assess severity. The disadvantages of IVCM include the need for an experienced technician and patient cooperation, its unsuitability for detecting bacteria and viruses owing to its axial resolution limit of 5–7 µm, high costs and motion artefacts. In addition, dense corneal infiltrates and/or scarring can limit tissue penetration and visualisation [4,14,15,41,43].

In clinical practice, IVCM is typically performed in cases of progressive keratitis, suspected AK or fungal keratitis (FK), negative culture results, deep infections and interface infectious keratitis following corneal surgery, where access for conventional microbiological tests is limited [4,19].

5. Artificial Intelligence—Deep Learning Methods

5.1. Background

The application of artificial intelligence in health care is now a reality owing to advances in computational power, the refinement of learning algorithms and architectures, the availability of big data and public access to deep neural networks [50,51,52]. Deep learning algorithms mostly use multimedia data (images, videos and sounds) and involve large-scale neural networks, such as artificial neural networks (ANN), convolutional neural networks (CNN) and recurrent neural networks (RNN) [51]. The advantages of deep CNNs are that they learn from data without requiring human-engineered features and can process large, high-dimensional training datasets [53]. A CNN model contains multiple convolutional layers, pooling layers and activation units, which are trained on labelled images by minimising a pre-defined loss function. A convolutional layer applies a number of filters to the input image or to the feature map computed by the previous layer, enhancing features at particular locations in the image. The weights of these filters are learned during training. A pooling layer is a dimensionality-reduction operator that down-samples its input from the previous layer. Average and maximum pooling are the most popular operators; they take the average and maximum value, respectively, of each local region during down-sampling [53].
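The convolution, activation and pooling operations described above can be sketched in a few lines of NumPy. This is a single-channel illustration with a hand-set vertical-edge filter, whereas a real CNN learns many filters across many channels; `conv2d`, `relu` and `pool2d` are illustrative names, not a framework API.

```python
import numpy as np

def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNNs (strictly a cross-correlation:
    the kernel is not flipped)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Activation unit: keep positive responses, zero the rest."""
    return np.maximum(x, 0.0)

def pool2d(x, size=2, mode="max"):
    """Non-overlapping pooling that down-samples x by `size` in each direction."""
    h, w = x.shape[0] // size, x.shape[1] // size
    blocks = x[:h * size, :w * size].reshape(h, size, w, size)
    return blocks.max(axis=(1, 3)) if mode == "max" else blocks.mean(axis=(1, 3))

# Toy 6x6 "image" with a vertical edge between columns 2 and 3.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Hand-set vertical-edge filter; in a trained CNN these weights are learned.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

features = relu(conv2d(image, kernel))   # 4x4 feature map, strongest along the edge
print(pool2d(features, 2, "max"))        # 2x2 map after max pooling
print(pool2d(features, 2, "average"))    # 2x2 map after average pooling
```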

Some common terms used in AI studies are summarised in [53]. The receiver operating characteristic (ROC) curve plots sensitivity (y-axis) against 1 − specificity (x-axis) as the classification threshold varies, and the area under the ROC curve (AUROC) summarises the performance of a model across all thresholds. An AUROC of 1 indicates a ‘perfect’ classifier, an AUROC between 0.5 and 1 is typical of real-world classifiers, and an AUROC of 0.5 indicates a ‘poor’ classifier that is no better than a random guess [53].
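The ROC curve and AUROC can be computed directly from a model's predicted probabilities. The sketch below traces the curve by lowering the threshold one case at a time, integrates it with the trapezoidal rule, and checks the result against the equivalent pairwise interpretation of AUROC. The labels and scores are hypothetical, and the trapezoid version assumes no tied scores; libraries such as scikit-learn provide tested implementations (`roc_curve`, `roc_auc_score`).

```python
import numpy as np

def roc_points(labels, scores):
    """ROC points: false positive rate (1 - specificity) on x, true positive
    rate (sensitivity) on y, lowering the threshold from the highest score.
    Assumes both classes are present and no scores are tied."""
    labels = np.asarray(labels)[np.argsort(-np.asarray(scores, dtype=float))]
    tp = np.cumsum(labels == 1)          # positives at or above each threshold
    fp = np.cumsum(labels == 0)          # negatives at or above each threshold
    tpr = np.concatenate([[0.0], tp / tp[-1]])
    fpr = np.concatenate([[0.0], fp / fp[-1]])
    return fpr, tpr

def auroc_trapezoid(labels, scores):
    """Area under the ROC curve by the trapezoidal rule."""
    fpr, tpr = roc_points(labels, scores)
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))

def auroc_pairwise(labels, scores):
    """Same area via its probabilistic meaning: the chance that a random positive
    case scores higher than a random negative case (ties count as half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical model outputs: 1 = keratitis, 0 = normal cornea
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]
print(auroc_trapezoid(labels, scores), auroc_pairwise(labels, scores))
```

Both functions give 8/9 (about 0.89) here: eight of the nine positive-negative pairs are ranked correctly.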

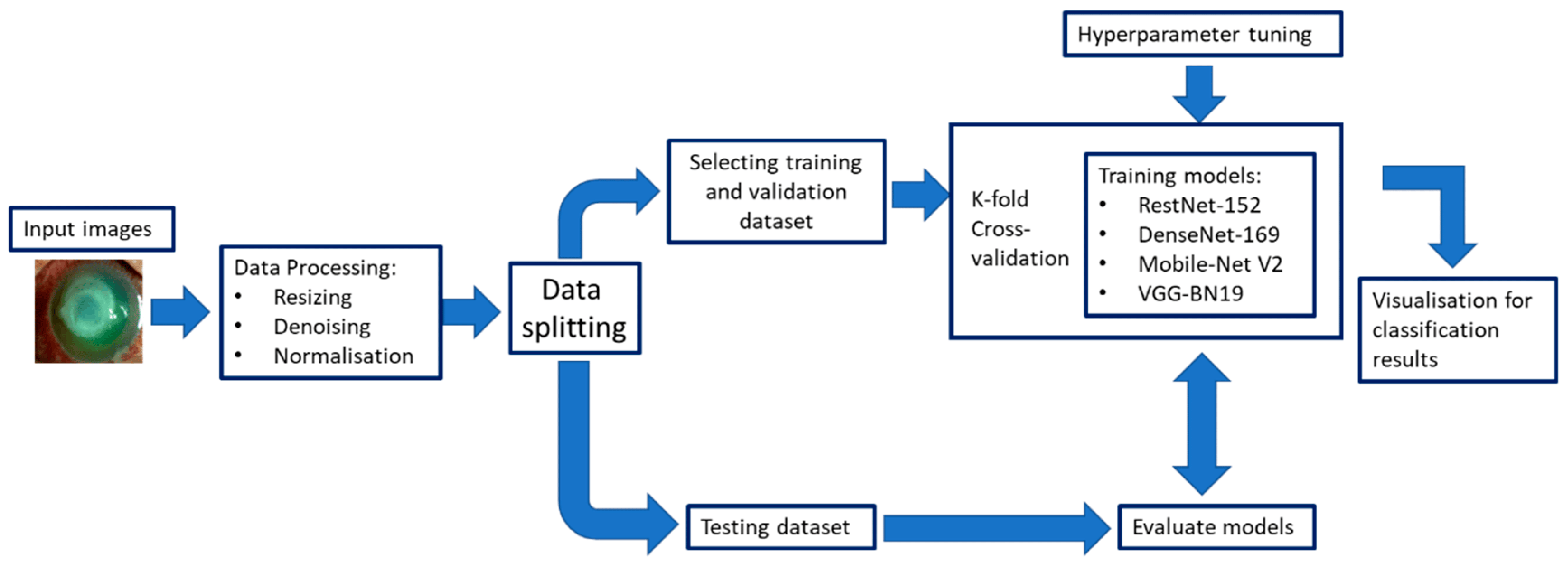

Several aspects need to be considered when training a DL CNN model. For instance, the quality of the training dataset is essential to the model’s performance. Image annotation is the process of labelling the images of a dataset to train DL models; annotations are provided by clinicians and are usually pixel-level, image-level or both. Further, the model may suffer from ‘over-fitting’; that is, it may not generalise well to new test data because it has many parameters relative to the small number of training examples. A validation dataset is generally used to determine when to stop training in order to avoid over-fitting, and drop-out, data augmentation and transfer learning have been used to improve the generalisability of a trained model. An independent external test set is then used to assess the generalisability of the trained DL model. Performance is normally lower on an independent test set, mainly because of over-fitting to the training dataset or a discrepancy in data distribution between the training and testing datasets. Figure 8 illustrates an example framework of several DL models for diagnosing infectious keratitis, based on Kuo et al. [54].
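Using a validation set to decide when to stop training is commonly called early stopping. A minimal sketch of the rule follows; the patience of three epochs and the loss values are hypothetical, and `early_stopping_epoch` is an illustrative helper, since deep learning frameworks typically provide this logic as a built-in callback.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the (0-based) epoch whose weights would be kept: the epoch with
    the lowest validation loss seen before `patience` consecutive epochs
    pass without improvement."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0   # new best: keep these weights
        else:
            waited += 1
            if waited >= patience:                           # no improvement: stop training
                break
    return best_epoch

# Hypothetical validation losses: they fall, then rise as the model
# starts to over-fit the training set.
val_losses = [0.92, 0.71, 0.60, 0.55, 0.57, 0.59, 0.64, 0.70]
print(early_stopping_epoch(val_losses))
```

Here training stops after epoch 6 and the weights from epoch 3, where validation loss was lowest, are retained.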

Figure 8. Framework of several deep-learning models for diagnosing bacterial keratitis, inspired by the framework of Kuo et al. [54].

From 2016, the use of artificial intelligence (AI) with deep learning (DL) in ophthalmology initially focused on posterior segment diseases such as diabetic retinopathy and age-related macular degeneration, but its application to diseases of the cornea, cataract and anterior chamber structures has surged in recent years [50]. Corneal AI research has focused on diseases that require corneal imaging to determine appropriate management and has used slit lamp photography, corneal topography and anterior segment optical coherence tomography [50]. For infectious keratitis (IK), DL with CNNs has been shown to be a potentially more accessible diagnostic method via image recognition [4,54,55]. Many studies have evaluated DL methods for diagnosing IK using images taken with a handheld camera, a camera mounted on a slit lamp or a confocal microscope [51,55,56,57,58,59,60,61,62,63,64]. Highly efficient DL algorithms include ResNet-152 [65], DenseNet-169 [66], MobileNetV2 [67] and VGG-19_BN [68]. Recent studies have used an ensemble approach, in which several learning algorithms perform the same classification task, yielding better validity and improved generalisation performance [61,69]. A CNN-based ensemble takes elements of the different CNN algorithms to build the final model. Some studies have reported that ensemble DL models classify the stages of diabetic retinopathy and glaucoma from fundus photographs better than a single CNN model [61].
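How each cited ensemble combines its members is not specified here. One common option, shown as an assumption, is soft voting: averaging the class probabilities predicted by several CNNs and taking the most probable class. The probabilities below are hypothetical placeholders for the softmax outputs of three trained models, and `soft_vote` is an illustrative helper.

```python
import numpy as np

CLASSES = ["bacterial", "fungal"]   # hypothetical two-class task

def soft_vote(prob_list, weights=None):
    """Soft-voting ensemble: (weighted) average of per-model class probabilities.

    prob_list: one (n_images, n_classes) array per model.
    Returns the averaged probabilities and the predicted class index per image.
    """
    probs = np.stack([np.asarray(p, dtype=float) for p in prob_list])  # (models, images, classes)
    w = np.ones(len(prob_list)) if weights is None else np.asarray(weights, dtype=float)
    avg = np.tensordot(w / w.sum(), probs, axes=1)                    # (images, classes)
    return avg, avg.argmax(axis=1)

# Hypothetical softmax outputs of three CNNs for two slit lamp images
model_a = [[0.60, 0.40], [0.30, 0.70]]
model_b = [[0.40, 0.60], [0.45, 0.55]]
model_c = [[0.70, 0.30], [0.20, 0.80]]

avg, pred = soft_vote([model_a, model_b, model_c])
print([CLASSES[i] for i in pred])
```

Averaging lets confident members outweigh an uncertain one: model_b alone would label the first image fungal, but the ensemble labels it bacterial.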

5.2. Deep Learning Models in Infectious Keratitis

Several studies have used DL models to diagnose IK. Li et al. developed a DL system to classify corneal images as keratitis, other corneal abnormalities or normal cornea. The authors trained three DL algorithms on an internal image dataset and evaluated the system on three external datasets and a smartphone dataset. On the smartphone dataset, the DenseNet121 algorithm performed best in classifying keratitis, other corneal abnormalities and normal cornea, with an AUROC of 0.967 (95% CI, 0.955–0.977), a sensitivity of 91.9% (95% CI, 89.4–94.4) and a specificity of 96.9% (95% CI, 95.6–98.2) for detecting keratitis [70]. To differentiate the types of infectious keratitis, Redd et al. used images from handheld cameras to train five CNNs to distinguish fungal keratitis (FK) from bacterial keratitis (BK) and compared their performance with that of human experts. The best-performing CNN was MobileNet, with an AUROC of 0.86. As a group, the CNNs achieved a statistically significantly higher AUROC (0.84) than the experts (0.76, p < 0.01). The CNNs were more accurate for FK (81%) than for BK (75%), whereas the experts were more accurate for BK (88%) than for FK (56%) [55]. Wang et al. investigated the potential of DL for classifying IK using slit lamp images and smartphone photographs, and also studied whether information from the sclera, eyelashes and lids could improve the diagnosis of keratitis. The slit lamp images (global images) were pre-processed to exclude the irrelevant background (regional images). From each smartphone photograph, a small patch was extracted to make it resemble the global slit lamp images, and three CNNs were assessed. InceptionV3 performed best, with an AUROC of 0.9588 on global images, 0.9425 on regional images and 0.8529 on smartphone images, while the two ophthalmologists reached AUROCs of 0.8050 and 0.7333, respectively. The lower performance on smartphone images could be due to multiple factors, including the size of the dataset and the considerable variation in imaging conditions (e.g., ambient light, camera brand and focus) [56].

Hung et al. used slit lamp images to train eight CNNs to identify BK and FK. Diagnostic accuracy ranged from 79.6% to 95.9% for BK and from 26.3% to 65.8% for FK. The best-performing model was DenseNet161, with an AUROC of 0.85 for both types of keratitis and diagnostic accuracies of 87.3% for BK and 65.8% for FK [57]. Kuo et al. assessed the performance of eight CNNs (four EfficientNet and four non-EfficientNet models) in diagnosing BK from slit lamp images. There was no significant difference in diagnostic accuracy between the non-EfficientNet and EfficientNet models, with accuracies ranging from 68.8% to 71.7% and AUROCs from 0.734 to 0.765 [54]. EfficientNet B3 had the best average AUROC, with 74% sensitivity and 64% specificity. The diagnostic accuracy of these models (69% to 72%) was comparable to that of ophthalmologists (66% to 75%) [54]. Ghosh et al. built a model called DeepKeratitis on top of three pre-trained CNNs to classify FK and BK. Among the single CNNs, VGG19 performed best, with an F1 score of 0.78, precision of 0.88 and sensitivity of 0.70. An ensemble learning model achieved higher performance, with an F1 score of 0.83, precision of 0.91 and sensitivity of 0.77. The F1 score is the harmonic mean of precision and recall (sensitivity) and measures test performance; a value close to 1.0 indicates both high precision and high recall. The ensemble model also achieved the highest area under the precision-recall curve (AUPRC), 0.904 [62].
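Because the F1 score is the harmonic mean of precision and recall, the DeepKeratitis values reported above can be checked for internal consistency with a one-line function (an illustrative helper, not the authors' code):

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (recall = sensitivity)."""
    return 2 * precision * recall / (precision + recall)

# Precision and sensitivity values reported for DeepKeratitis
print(round(f1_score(0.88, 0.70), 2))   # VGG19 alone
print(round(f1_score(0.91, 0.77), 2))   # ensemble model
```

The two results, 0.78 and 0.83, match the reported F1 scores.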

Hu et al. proposed a DL system that uses slit lamp images to automatically screen for and diagnose IK (BK, FK and viral keratitis (VK)). Six CNNs were trained. EfficientNetV2-M performed best, with an accuracy of 0.735, a sensitivity of 0.68 and a specificity of 0.904, and also outperformed two ophthalmologists (accuracies of 0.661 and 0.685). The overall AUROC of EfficientNetV2-M was 0.85, with 1.00 for normal cornea, 0.87 for VK, 0.87 for FK and 0.64 for BK [71]. In 2022, Kuo et al. explored eight single and four ensemble DL models for diagnosing BK caused by Pseudomonas aeruginosa. Among the eight single models, EfficientNet B2 had the highest accuracy (71.2%), while among the ensemble models, the best four-model ensemble had the highest accuracy (72.1%), with 81% sensitivity and 51.5% specificity. EfficientNet B3 had the highest specificity, 68.2%. There was no statistically significant difference in AUROC or diagnostic accuracy among the single models or among the four best ensemble models [61]. Natarajan et al. explored the application of three DL algorithms to diagnose HSV stromal keratitis with ulceration from slit lamp images. DenseNet performed best, with 72% accuracy, an AUROC of 0.73, a sensitivity of 69.6% and a specificity of 76.5% [63].

Koyama et al. developed a hybrid DL algorithm to determine the causal organism of IK by analysing slit lamp images. Facial recognition techniques were also used, as they accommodate different angles, lighting levels and degrees of resolution. ResNet-50 and InceptionResNetV2 were used, and the final model was built on InceptionResNetV2 using 4306 images (3994 clinical and 312 web images). This algorithm had a high overall diagnostic accuracy: accuracy/AUROC was 97.9%/0.995 for Acanthamoeba, 90.7%/0.963 for bacteria, 95.0%/0.975 for fungi and 92.3%/0.946 for HSV [58]. Similarly, Zhang et al. aimed to develop a DL diagnostic model to differentiate bacterial, fungal, viral and Acanthamoeba keratitis at an early stage. KeratitisNet, the combination of ResNeXt101_32x16d and DenseNet169, had the highest accuracy (77.08%). The diagnostic accuracy/AUROC was 70.27%/0.86, 77.71%/0.91, 83.81%/0.96 and 79.31%/0.98 for BK, FK, AK and HSV keratitis (HSK), respectively. KeratitisNet most often confused BK and FK images, with 20% of BK cases mispredicted as FK and 16% of FK cases mispredicted as BK. The accuracy of the model was significantly higher than that of an ophthalmologist’s clinical diagnosis (p < 0.001) [59].
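Statements such as "20% of BK cases mispredicted as FK" are read from a row-normalised confusion matrix, in which each row is a true class and each column a predicted class. In the sketch below, the counts are invented for illustration (only the BK-as-FK and FK-as-BK rates were chosen to echo the figures above), so the remaining values do not reflect KeratitisNet's performance.

```python
import numpy as np

LABELS = ["BK", "FK", "AK", "HSK"]

def per_class_rates(confusion):
    """Row-normalise a confusion matrix (rows = true class, columns = predicted).

    Diagonal entries are per-class accuracy (recall); off-diagonal entries are
    the fraction of each true class mispredicted as another class.
    """
    confusion = np.asarray(confusion, dtype=float)
    return confusion / confusion.sum(axis=1, keepdims=True)

# Hypothetical counts for illustration only (NOT the KeratitisNet results)
confusion = np.array([
    [70, 20,  6,  4],   # true BK
    [16, 78,  4,  2],   # true FK
    [ 5,  6, 84,  5],   # true AK
    [ 4,  5,  3, 88],   # true HSK
])
rates = per_class_rates(confusion)
overall_accuracy = np.trace(confusion) / confusion.sum()

for i, true_label in enumerate(LABELS):
    for j, pred_label in enumerate(LABELS):
        if i != j and rates[i, j] >= 0.10:   # report only the larger confusions
            print(f"{true_label} mispredicted as {pred_label}: {rates[i, j]:.0%}")
print(f"overall accuracy: {overall_accuracy:.1%}")
```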

Several studies have also investigated the performance of DL CNN methods in diagnosing FK from IVCM images [50,51]. Liu et al. proposed a novel CNN model for automatically diagnosing FK using data augmentation and image fusion. The accuracies of conventional AlexNet and VGGNet were 99.35% and 99.14%, respectively. The proposed model was reported to balance diagnostic performance and computational complexity, improving real-time performance in diagnosing FK [72]. Lv et al. developed an intelligent system based on a DL CNN (ResNet) model to automatically diagnose FK from IVCM images. The AUROC of the system for detecting hyphae was 0.9875, with an accuracy of 0.9364, sensitivity of 0.8256 and specificity of 0.9889 [73].

5.3. Future Perspectives

To date, most studies investigating the use of AI in ophthalmology have focused on disease screening and diagnosis using existing clinical data and images, based on machine learning and CNNs, in conditions such as age-related macular degeneration (AMD), diabetic retinopathy, glaucoma and cataract [74]. For the diagnosis of infectious keratitis, generating synthetic data with generative adversarial networks (GANs) may offer a new way to train AI models without requiring the thousands of real-case images typically used to train CNNs. For less common conditions, such as fungal or Acanthamoeba keratitis, a GAN could serve as a low-shot learning method via data augmentation, allowing conventional DL models to learn less common conditions from a small number of images [75,76]. Low-shot learning has been used to detect and classify retinal diseases [77,78] and conjunctival melanoma [75].

Natural language processing (NLP) models, such as ChatGPT, developed by OpenAI (San Francisco, CA, USA), are another AI technology that generates synthetic data [74,79]. ChatGPT uses DL methods to generate coherent text in response to a user’s ‘prompt’ written in plain language [80]. ChatGPT was not designed for specific tasks, such as reading images or assessing medical notes; however, OpenAI has investigated its potential use in healthcare and medical applications and research, including medical note-taking and medical consultations. The medical knowledge embedded in ChatGPT may be used in tasks such as medical consultation, diagnosis and education, with variable accuracy [81]. For example, Delsoz et al. entered corneal cases (including infectious keratitis) into ChatGPT-4.0 and ChatGPT-3.5 to obtain a diagnosis, which was compared with the diagnoses of three corneal specialists. The provisional diagnosis accuracy was 85% (17 of 20 cases) for ChatGPT-4.0 and 65% (13 of 20 cases) for ChatGPT-3.5, versus 100% for specialist 1 and 90% each for specialists 2 and 3 [79]. These findings suggest that ChatGPT may be used to analyse clinical data alongside DL models (CNNs or GANs) to diagnose and differentiate infectious keratitis.

5.4. Limitations

Despite the great advances of DL with CNNs in image-based diagnosis, it remains challenging to achieve satisfactory diagnostic performance in IK. Reasons include large intra-class variance (difficulty in capturing the common characteristics of images in the same class); small inter-class differences (difficulty in discriminating the margin between classes); non-standard imaging protocols, which make it harder to find features common to each type of IK [64]; over-fitting due to the lack of large-scale IK datasets [63,64]; imbalanced training datasets [57]; and misclassification bias [62]. To overcome some of these limitations, Li et al. developed a novel CNN, the Class Aware Attention Network, to diagnose IK from slit lamp images. In this network, a class-aware classification module is first trained to learn class-related discriminative features using a separate branch for each class. The learned class-aware discriminative features are then fed into the main branch and fused with other feature maps using two attention strategies to improve the final multi-class classification performance [64]. This method showed higher accuracy (0.70) and specificity (0.89) than eight single CNNs, and it can extract fine features of keratitis lesions that are not easy for clinicians to identify [64]. Further, the causal organisms of keratitis and its clinical presentations vary widely, with regional differences in both [4,58,82,83]. Improving image resolution, increasing the number of training images and optimising algorithm parameters may enhance the accuracy of the models [51,72,73]. Future studies will be needed to validate CNN approaches to the diagnosis of IK in a range of global settings with a variety of devices (slit lamp microscopes and cameras) [73].
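One common mitigation for the dataset imbalance noted above [57] is to weight each image's training loss inversely to the frequency of its class, so that rare classes such as AK contribute as much to training as common ones. The sketch below uses the same formula as scikit-learn's `class_weight="balanced"` heuristic, n_samples / (n_classes × class count); the label counts are hypothetical, and this is not presented as the method used in the cited studies.

```python
from collections import Counter

def inverse_frequency_weights(labels):
    """Per-class loss weights proportional to 1 / class frequency.

    Uses n_samples / (n_classes * count_of_class), so every class gets a
    weight of 1.0 when the dataset is perfectly balanced.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical, imbalanced training set of slit lamp images
labels = ["BK"] * 600 + ["FK"] * 300 + ["AK"] * 60 + ["HSK"] * 40
for cls, weight in inverse_frequency_weights(labels).items():
    print(f"{cls}: weight {weight:.2f}")
```

With these weights, each class contributes the same total weight (250 here) to the loss; oversampling or augmentation of rare classes are alternative approaches.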

6. Conclusions

Infectious keratitis is among the top five leading causes of blindness worldwide. Early identification of the causal organism is crucial to guide management and avoid severe complications, such as vision impairment and blindness. Clinical examination under the slit lamp is essential for diagnosing the infection, and culture of corneal scrapings is still the gold standard for identifying and isolating the causal organism. Slit lamp photography was revolutionised by the invention of digital cameras and smartphones. Slit lamp image quality depends essentially on the resolution of the digital or smartphone camera sensor, the resolution of the slit lamp and the focal length of the smartphone camera system. A six-megapixel image is sufficient for clinical photography. Alternative diagnostic tools using imaging devices, such as OCT and IVCM, can track the progression of the infection and may identify the causal organism(s). The overall sensitivity and specificity of IVCM are 66.7–94% and 78–100%, respectively, for fungal keratitis and 80–100% and 84–100% for Acanthamoeba keratitis.

Owing to advances in AI technology, many study groups worldwide have evaluated DL-CNN models for diagnosing the different types of IK, using corneal photographs taken with smartphones or slit lamp cameras, or confocal images, with variable but promising results. Nevertheless, several challenges must be overcome before this technology can be used in healthcare settings, including non-standard imaging protocols, difficulty in finding the distinguishing features common to each type of IK, cost, accessibility and cost-effectiveness. More recent DL models, such as GANs, may help overcome these limitations by generating synthetic data to train the models. Moreover, natural language processing models such as ChatGPT may be able to analyse clinical data; their outputs, together with GAN-based models, may assist in diagnosing IK and differentiating its types. Further work is needed to examine and validate the clinical performance of these CNN models in real-world healthcare settings with multi-ethnic populations to improve the generalisability of the models. Finally, prospective clinical trials would be valuable to evaluate whether this technology improves patient clinical outcomes.

7. The Literature Search

We searched PubMed and Ovid MEDLINE for literature published from 2010 to 2022. The search terms covered a wide spectrum of concepts associated with corneal imaging in infectious keratitis, including cornea, corneal ulcer, microbial keratitis, infectious keratitis, bacterial keratitis, fungal keratitis, HSV keratitis, viral keratitis, herpetic keratitis, optical coherence tomography, deep learning, convolutional neural networks, slit lamp images, artificial intelligence, computer vision, machine learning and in vivo confocal microscopy. The reference lists of retrieved articles were also screened to identify studies that the initial search may have missed.

References

1. Ung, L.; Acharya, N.R.; Agarwal, T.; Alfonso, E.C.; Bagga, B.; Bispo, P.J.; Burton, M.J.; Dart, J.K.; Doan, T.; Fleiszig, S.M.; et al. Infectious corneal ulceration: A proposal for neglected tropical disease status. Bull. World Health Organ. 2019, 97, 854–856.

2. Wang, E.Y.; Kong, X.; Wolle, M.; Gasquet, N.; Ssekasanvu, J.; Mariotti, S.P.; Bourne, R.; Taylor, H.; Resnikoff, S.; West, S. Global trends in blindness and vision impairment resulting from corneal opacity 1984–2020: A meta-analysis. Ophthalmology 2023, 130, 863–871.

3. Flaxman, S.R.; Bourne, R.R.A.; Resnikoff, S.; Ackland, P.; Braithwaite, T.; Cicinelli, M.V.; Das, A.; Jonas, J.B.; Keeffe, J.; Kempen, J.H.; et al. Global causes of blindness and distance vision impairment 1990–2020: A systematic review and meta-analysis. Lancet Glob. Health 2017, 5, e1221–e1234.

4. Cabrera-Aguas, M.; Khoo, P.; Watson, S.L. Infectious keratitis: A review. Clin. Exp. Ophthalmol. 2022, 50, 543–562.

5. Ngo, J.; Khoo, P.; Watson, S.L. Improving the efficiency and the technique of the corneal scrape procedure via an evidence based instructional video at a quaternary referral eye hospital. Curr. Eye Res. 2020, 45, 529–534.

6. Ting, D.S.J.; Ho, C.S.; Cairns, J.; Elsahn, A.; Al-Aqaba, M.; Boswell, T.; Said, D.G.; Dua, H.S. 12-year analysis of incidence, microbiological profiles and in vitro antimicrobial susceptibility of infectious keratitis: The Nottingham infectious keratitis study. Br. J. Ophthalmol. 2021, 105, 328–333.

7. Maberly, J. Evaluating Severity of Microbial Keratitis Using Optical Coherence Tomography. Ph.D. Thesis, The University of Sydney, Sydney, Australia, 2021.

8. Allan, B.D.; Dart, J.K. Strategies for the management of microbial keratitis. Br. J. Ophthalmol. 1995, 79, 777–786.

9. Khoo, P.; McCluskey, P.; Cabrera-Aguas, M.; Watson, S.L. Bacterial eye infections. In Encyclopedia of Infection and Immunity; Rezaei, N., Ed.; Elsevier: Oxford, UK, 2022; pp. 204–218.

10. Cabrera-Aguas, M.; Khoo, P.; McCluskey, P.; Watson, S.L. Viral ocular infections. In Encyclopedia of Infection and Immunity; Rezaei, N., Ed.; Elsevier: Oxford, UK, 2022; pp. 219–233.

11. White, M.L.; Chodosh, J. Herpes Simplex Virus Keratitis: A Treatment Guideline; Hoskins Center for Quality Eye Care, American Academy of Ophthalmology: San Francisco, CA, USA, 2014.

12. Azher, T.N.; Yin, X.T.; Tajfirouz, D.; Huang, A.J.; Stuart, P.M. Herpes simplex keratitis: Challenges in diagnosis and clinical management. Clin. Ophthalmol. 2017, 11, 185–191.

13. Thomas, P.A. Current perspectives on ophthalmic mycoses. Clin. Microbiol. Rev. 2003, 16, 730–797.

14. Maharana, P.; Sharma, N.; Nagpal, R.; Jhanji, V.; Das, S.; Vajpayee, R. Recent advances in diagnosis and management of mycotic keratitis. Indian J. Ophthalmol. 2016, 64, 346–357.

15. Cabrera-Aguas, M.; Khoo, P.; McCluskey, P.; Watson, S.L. Fungal ocular infections. In Encyclopedia of Infection and Immunity; Rezaei, N., Ed.; Elsevier: Oxford, UK, 2022; pp. 234–245.

16. Dart, J.K.; Saw, V.P.; Kilvington, S. Acanthamoeba keratitis: Diagnosis and treatment update 2009. Am. J. Ophthalmol. 2009, 148, 487–499.e2.

17. Höllhumer, R.; Keay, L.; Watson, S.L. Acanthamoeba keratitis in Australia: Demographics, associated factors, presentation and outcomes: A 15-year case review. Eye 2020, 34, 725–732.

18. Khoo, P.; Cabrera-Aguas, M.; Watson, S.L. Parasitic eye infections. In Encyclopedia of Infection and Immunity; Rezaei, N., Ed.; Elsevier: Oxford, UK, 2022; pp. 246–258.

19. Ting, D.S.J.; Gopal, B.P.; Deshmukh, R.; Seitzman, G.D.; Said, D.G.; Dua, H.S. Diagnostic armamentarium of infectious keratitis: A comprehensive review. Ocul. Surf. 2022, 23, 27–39.

20. American Academy of Ophthalmology. EyeWiki: Slit Lamp Examination. Available online: https://eyewiki.aao.org/Slit_Lamp_Examination (accessed on 29 September 2023).

21. Muth, D.R.; Blaser, F.; Foa, N.; Scherm, P.; Mayer, W.J.; Barthelmes, D.; Zweifel, S.A. Smartphone slit lamp imaging-usability and quality assessment. Diagnostics 2023, 13, 423.

22. Mukherjee, B.; Nair, A.G. Principles and practice of external digital photography in ophthalmology. Indian J. Ophthalmol. 2012, 60, 119–125.

23. Chhablani, J.; Kaja, S.; Shah, V.A. Smartphones in ophthalmology. Indian J. Ophthalmol. 2012, 60, 127.

24. Google Store. Pixel 8 Specifications. Available online: https://store.google.com/au/product/pixel_8_specs?hl=en-US&pli=1 (accessed on 29 September 2023).

25. Roy, S.; Pantanowitz, L.; Amin, M.; Seethala, R.R.; Ishtiaque, A.; Yousem, S.A.; Parwani, A.V.; Cucoranu, I.; Hartman, D.J. Smartphone adapters for digital photomicrography. J. Pathol. Inform. 2014, 5, 24.

26. Konstantopoulos, A.; Yadegarfar, G.; Fievez, M.; Anderson, D.F.; Hossain, P. In vivo quantification of bacterial keratitis with optical coherence tomography. Invest. Ophthalmol. Vis. Sci. 2011, 52, 1093–1097.

27. Radhakrishnan, S.; Rollins, A.M.; Roth, J.E.; Yazdanfar, S.; Westphal, V.; Bardenstein, D.S.; Izatt, J.A. Real-time optical coherence tomography of the anterior segment at 1310 nm. Arch. Ophthalmol. 2001, 119, 1179–1185.

28. Ting, D.S.J.; Said, D.G.; Dua, H.S. Interface haze after Descemet stripping automated endothelial keratoplasty. JAMA Ophthalmol. 2019, 137, 1201–1202.

29. Ting, D.S.J.; Srinivasan, S.; Danjoux, J.-P. Epithelial ingrowth following laser in situ keratomileusis (LASIK): Prevalence, risk factors, management and visual outcomes. BMJ Open Ophthalmol. 2018, 3, e000133.

30. Almaazmi, A.; Said, D.G.; Messina, M.; Alsaadi, A.; Dua, H.S. Mechanism of fluid leak in non-traumatic corneal perforations: An anterior segment optical coherence tomography study. Br. J. Ophthalmol. 2020, 104, 1304–1309.

31. Li, J.; Ren, S.W.; Dai, L.J.; Zhang, B.; Gu, Y.W.; Pang, C.J.; Wang, Y. Bacterial keratitis following small incision lenticule extraction. Infect. Drug Resist. 2022, 15, 4585–4593.

32. Ganesh, S.; Brar, S.; Nagesh, B.N. Management of infectious keratitis following uneventful small-incision lenticule extraction using a multimodal approach: A case report. Indian J. Ophthalmol. 2020, 68, 3064.

33. Geevarghese, A.; Wollstein, G.; Ishikawa, H.; Schuman, J.S. Optical coherence tomography and glaucoma. Annu. Rev. Vis. Sci. 2021, 7, 693–726.

34. Unterhuber, A.; Povazay, B.; Bizheva, K.; Hermann, B.; Sattmann, H.; Stingl, A.; Le, T.; Seefeld, M.; Menzel, R.; Preusser, M.; et al. Advances in broad bandwidth light sources for ultrahigh resolution optical coherence tomography. Phys. Med. Biol. 2004, 49, 1235–1246.

35. Soliman, W.; Fathalla, A.M.; El-Sebaity, D.M.; Al-Hussaini, A.K. Spectral domain anterior segment optical coherence tomography in microbial keratitis. Graefe’s Arch. Clin. Exp. Ophthalmol. 2013, 251, 549–553.

36. Yamazaki, N.; Kobayashi, A.; Yokogawa, H.; Ishibashi, Y.; Oikawa, Y.; Tokoro, M.; Sugiyama, K. In vivo imaging of radial keratoneuritis in patients with Acanthamoeba keratitis by anterior-segment optical coherence tomography. Ophthalmology 2014, 121, 2153–2158.

37. Oliveira, M.A.; Rosa, A.; Soares, M.; Gil, J.; Costa, E.; Quadrado, M.J.; Murta, J. Anterior segment optical coherence tomography in the early management of microbial keratitis: A cross-sectional study. Acta Med. Port. 2020, 33, 318–325.

38. Schuman, J.S. Spectral domain optical coherence tomography for glaucoma (an AOS thesis). Trans. Am. Ophthalmol. Soc. 2008, 106, 426–458.

39. Adhi, M.; Liu, J.J.; Qavi, A.H.; Grulkowski, I.; Lu, C.D.; Mohler, K.J.; Ferrara, D.; Kraus, M.F.; Baumal, C.R.; Witkin, A.J.; et al. Choroidal analysis in healthy eyes using swept-source optical coherence tomography compared to spectral domain optical coherence tomography. Am. J. Ophthalmol. 2014, 157, 1272–1281.e1.

40. Kostanyan, T.; Wollstein, G.; Schuman, J.S. Evaluating glaucoma damage: Emerging imaging technologies. Expert Rev. Ophthalmol. 2015, 10, 183–195.

41. Donovan, C.; Arenas, E.; Ayyala, R.S.; Margo, C.E.; Espana, E.M. Fungal keratitis: Mechanisms of infection and management strategies. Surv. Ophthalmol. 2021, 67, 758–769.

42. Brasnu, E.; Bourcier, T.; Dupas, B.; Degorge, S.; Rodallec, T.; Laroche, L.; Borderie, V.; Baudouin, C. In vivo confocal microscopy in fungal keratitis. Br. J. Ophthalmol. 2007, 91, 588–591.

43. Kumar, R.L.; Cruzat, A.; Hamrah, P. Current state of in vivo confocal microscopy in management of microbial keratitis. Semin. Ophthalmol. 2010, 25, 166–170.

44. Kanavi, M.R.; Javadi, M.; Yazdani, S.; Mirdehghanm, S. Sensitivity and specificity of confocal scan in the diagnosis of infectious keratitis. Cornea 2007, 26, 782–786.

45. Wang, Y.E.; Tepelus, T.C.; Vickers, L.A.; Baghdasaryan, E.; Gui, W.; Huang, P.; Irvine, J.A.; Sadda, S.; Hsu, H.Y.; Lee, O.L. Role of in vivo confocal microscopy in the diagnosis of infectious keratitis. Int. Ophthalmol. 2019, 39, 2865–2874.

46. Vaddavalli, P.K.; Garg, P.; Sharma, S.; Sangwan, V.S.; Rao, G.N.; Thomas, R. Role of confocal microscopy in the diagnosis of fungal and Acanthamoeba keratitis. Ophthalmology 2011, 118, 29–35.

47. Goh, J.W.Y.; Harrison, R.; Hau, S.; Alexander, C.L.; Tole, D.M.; Avadhanam, V.S. Comparison of in vivo confocal microscopy, PCR and culture of corneal scrapes in the diagnosis of Acanthamoeba keratitis. Cornea 2018, 37, 480–485.

48. Chidambaram, J.D.; Prajna, N.V.; Larke, N.L.; Palepu, S.; Lanjewar, S.; Shah, M.; Elakkiya, S.; Lalitha, P.; Carnt, N.; Vesaluoma, M.H.; et al. Prospective study of the diagnostic accuracy of the in vivo laser scanning confocal microscope for severe microbial keratitis. Ophthalmology 2016, 123, 2285–2293.

49. Villani, E.; Baudouin, C.; Efron, N.; Hamrah, P.; Kojima, T.; Patel, S.V.; Pflugfelder, S.C.; Zhivov, A.; Dogru, M. In vivo confocal microscopy of the ocular surface: From bench to bedside. Curr. Eye Res. 2014, 39, 213–231.

50. Ting, D.S.J.; Foo, V.H.; Yang, L.W.Y.; Sia, J.T.; Ang, M.; Lin, H.; Chodosh, J.; Mehta, J.S.; Ting, D.S.W. Artificial intelligence for anterior segment diseases: Emerging applications in ophthalmology. Br. J. Ophthalmol. 2021, 105, 158–168.

51. Zhang, Z.; Wang, Y.; Zhang, H.; Samusak, A.; Rao, H.; Xiao, C.; Abula, M.; Cao, Q.; Dai, Q. Artificial intelligence-assisted diagnosis of ocular surface diseases. Front. Cell. Dev. Biol. 2023, 11, 1133680.

52. Buisson, M.; Navel, V.; Labbe, A.; Watson, S.L.; Baker, J.S.; Murtagh, P.; Chiambaretta, F.; Dutheil, F. Deep learning versus ophthalmologists for screening for glaucoma on fundus examination: A systematic review and meta-analysis. Clin. Exp. Ophthalmol. 2021, 49, 1027–1038.

53. Rampat, R.; Deshmukh, R.; Chen, X.; Ting, D.S.W.; Said, D.G.; Dua, H.S.; Ting, D.S.J. Artificial intelligence in cornea, refractive surgery, and cataract: Basic principles, clinical applications, and future directions. Asia Pac. J. Ophthalmol. 2021, 10, 268–281.

54. Kuo, M.-T.; Hsu, B.W.-Y.; Lin, Y.-S.; Fang, P.-C.; Yu, H.-J.; Chen, A.; Yu, M.-S.; Tseng, V.S. Comparisons of deep learning algorithms for diagnosing bacterial keratitis via external eye photographs. Sci. Rep. 2021, 11, 24227.

55. Redd, T.K.; Prajna, N.V.; Srinivasan, M.; Lalitha, P.; Krishnan, T.; Rajaraman, R.; Venugopal, A.; Acharya, N.; Seitzman, G.D.; Lietman, T.M.; et al. Image-based differentiation of bacterial and fungal keratitis using deep convolutional neural networks. Ophthalmol. Sci. 2022, 2, 100119.

56. Wang, L.; Chen, K.; Wen, H.; Zheng, Q.; Chen, Y.; Pu, J.; Chen, W. Feasibility assessment of infectious keratitis depicted on slit-lamp and smartphone photographs using deep learning. Int. J. Med. Inform. 2021, 155, 104583.

57. Hung, N.; Shih, A.K.; Lin, C.; Kuo, M.-T.; Hwang, Y.-S.; Wu, W.-C.; Kuo, C.-F.; Kang, E.Y.; Hsiao, C.-H. Using slit-lamp images for deep learning-based identification of bacterial and fungal keratitis: Model development and validation with different convolutional neural networks. Diagnostics 2021, 11, 1246.

58. Koyama, A.; Miyazaki, D.; Nakagawa, Y.; Ayatsuka, Y.; Miyake, H.; Ehara, F.; Sasaki, S.I.; Shimizu, Y.; Inoue, Y. Determination of probability of causative pathogen in infectious keratitis using deep learning algorithm of slit-lamp images. Sci. Rep. 2021, 11, 22642.

59. Zhang, Z.; Wang, H.; Wang, S.; Wei, Z.; Zhang, Y.; Wang, Z.; Chen, K.; Ou, Z.; Liang, Q. Deep learning-based classification of infectious keratitis on slit-lamp images. Ther. Adv. Chronic Dis. 2022, 13, 20406223221136071.

60. Kuo, M.-T.; Hsu, B.W.-Y.; Yin, Y.-K.; Fang, P.-C.; Lai, H.-Y.; Chen, A.; Yu, M.-S.; Tseng, V.S. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci. Rep. 2020, 10, 14424.

61. Kuo, M.T.; Hsu, B.W.; Lin, Y.S.; Fang, P.C.; Yu, H.J.; Hsiao, Y.T.; Tseng, V.S. Deep learning approach in image diagnosis of Pseudomonas keratitis. Diagnostics 2022, 12, 2948.

62. Ghosh, A.K.; Thammasudjarit, R.; Jongkhajornpong, P.; Attia, J.; Thakkinstian, A. Deep learning for discrimination between fungal keratitis and bacterial keratitis: DeepKeratitis. Cornea 2022, 41, 616–622.

63. Natarajan, R.; Matai, H.D.; Raman, S.; Kumar, S.; Ravichandran, S.; Swaminathan, S.; Rani Alex, J.S. Advances in the diagnosis of herpes simplex stromal necrotising keratitis: A feasibility study on deep learning approach. Indian J. Ophthalmol. 2022, 70, 3279–3283.

64. Li, J.; Wang, S.; Hu, S.; Sun, Y.; Wang, Y.; Xu, P.; Ye, J. Class-aware attention network for infectious keratitis diagnosis using corneal photographs. Comput. Biol. Med. 2022, 151 Pt A, 106301.

65. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

66. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

67. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

68. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

69. Ganaie, M.A.; Hu, M.; Malik, A.K.; Tanveer, M.; Suganthan, P.N. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

70. Li, Z.; Jiang, J.; Chen, K.; Chen, Q.; Zheng, Q.; Liu, X.; Weng, H.; Wu, S.; Chen, W. Preventing corneal blindness caused by keratitis using artificial intelligence. Nat. Commun. 2021, 12, 3738.

71. Hu, S.; Sun, Y.; Li, J.; Xu, P.; Xu, M.; Zhou, Y.; Wang, Y.; Wang, S.; Ye, J. Automatic diagnosis of infectious keratitis based on slit lamp images analysis. J. Pers. Med. 2023, 13, 519.

72. Liu, Z.; Cao, Y.; Li, Y.; Xiao, X.; Qiu, Q.; Yang, M.; Zhao, Y.; Cui, L. Automatic diagnosis of fungal keratitis using data augmentation and image fusion with deep convolutional neural network. Comput. Methods Programs Biomed. 2020, 187, 105019.

73. Lv, J.; Zhang, K.; Chen, Q.; Chen, Q.; Huang, W.; Cui, L.; Li, M.; Li, J.; Chen, L.; Shen, C.; et al. Deep learning-based automated diagnosis of fungal keratitis with in vivo confocal microscopy images. Ann. Transl. Med. 2020, 8, 706.

74. Choi, J.Y.; Yoo, T.K. New era after ChatGPT in ophthalmology: Advances from data-based decision support to patient-centered generative artificial intelligence. Ann. Transl. Med. 2023, 11, 337.

75. Yoo, T.K.; Choi, J.Y.; Kim, H.K.; Ryu, I.H.; Kim, J.K. Adopting low-shot deep learning for the detection of conjunctival melanoma using ocular surface images. Comput. Methods Programs Biomed. 2021, 205, 106086.

76. Jadon, S. An overview of deep learning architectures in few-shot learning domain. arXiv 2020, arXiv:2008.06365.

77. Quellec, G.; Lamard, M.; Conze, P.-H.; Massin, P.; Cochener, B. Automatic detection of rare pathologies in fundus photographs using few-shot learning. Med. Image Anal. 2020, 61, 101660.

78. Burlina, P.; Paul, W.; Mathew, P.; Joshi, N.; Pacheco, K.D.; Bressler, N.M. Low-shot deep learning of diabetic retinopathy with potential applications to address artificial intelligence bias in retinal diagnostics and rare ophthalmic diseases. JAMA Ophthalmol. 2020, 138, 1070–1077.

79. Delsoz, M.; Madadi, Y.; Munir, W.M.; Tamm, B.; Mehravaran, S.; Soleimani, M.; Djalilian, A.; Yousefi, S. Performance of ChatGPT in diagnosis of corneal eye diseases. medRxiv 2023.

80. Lee, P.; Bubeck, S.; Petro, J. Benefits, limits, and risks of GPT-4 as an AI Chatbot for medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

81. Singh, S.; Watson, S. ChatGPT as a tool for conducting literature review for dry eye disease. Clin. Exp. Ophthalmol. 2023, 51, 731–732.

82. Bartimote, C.; Foster, J.; Watson, S.L. The spectrum of microbial keratitis: An updated review. Open Ophthalmol. J. 2019, 13, 100–130.

83. Karsten, E.; Watson, S.L.; Foster, L.J. Diversity of microbial species implicated in keratitis: A review. Open Ophthalmol. J. 2012, 6, 110–124.